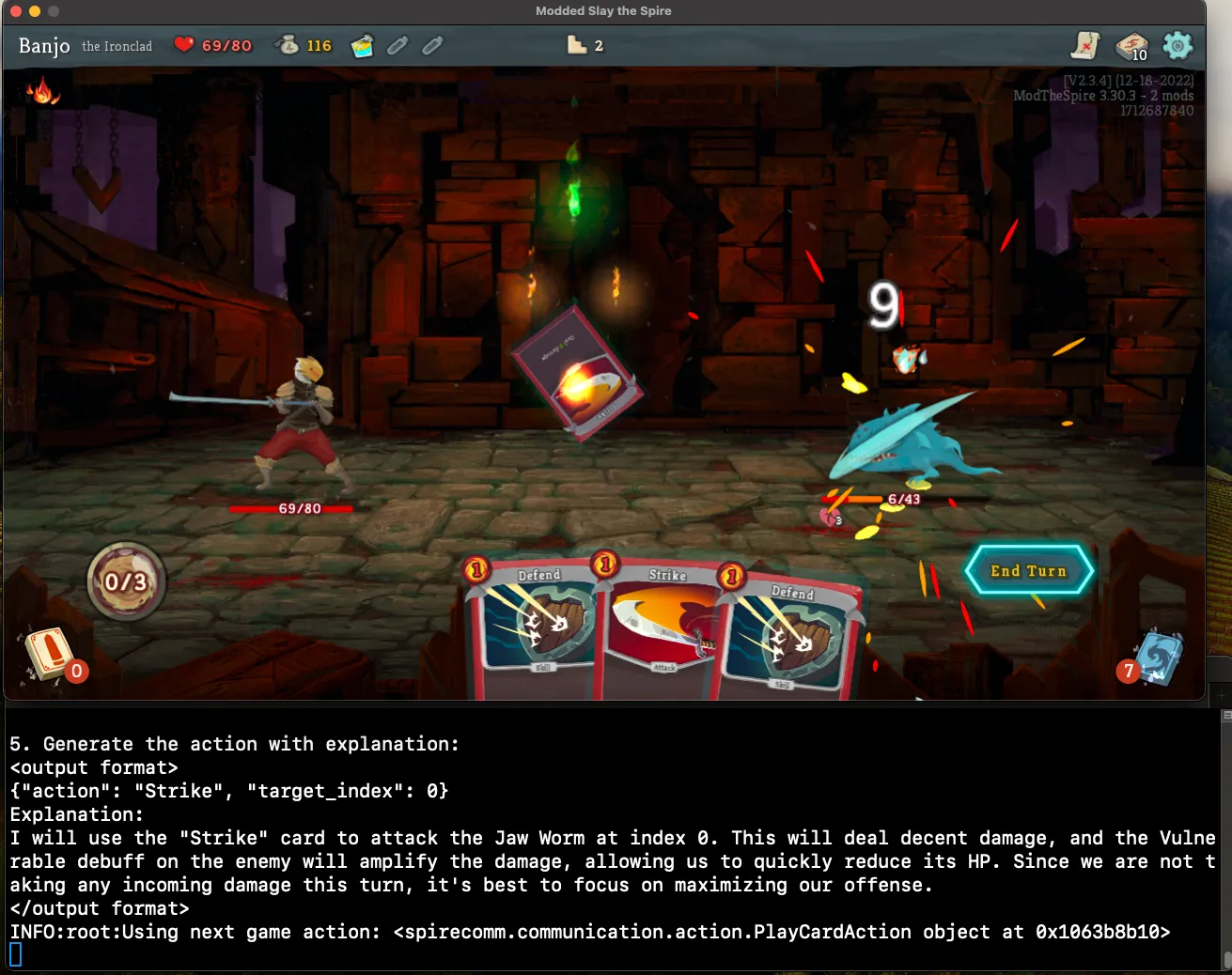

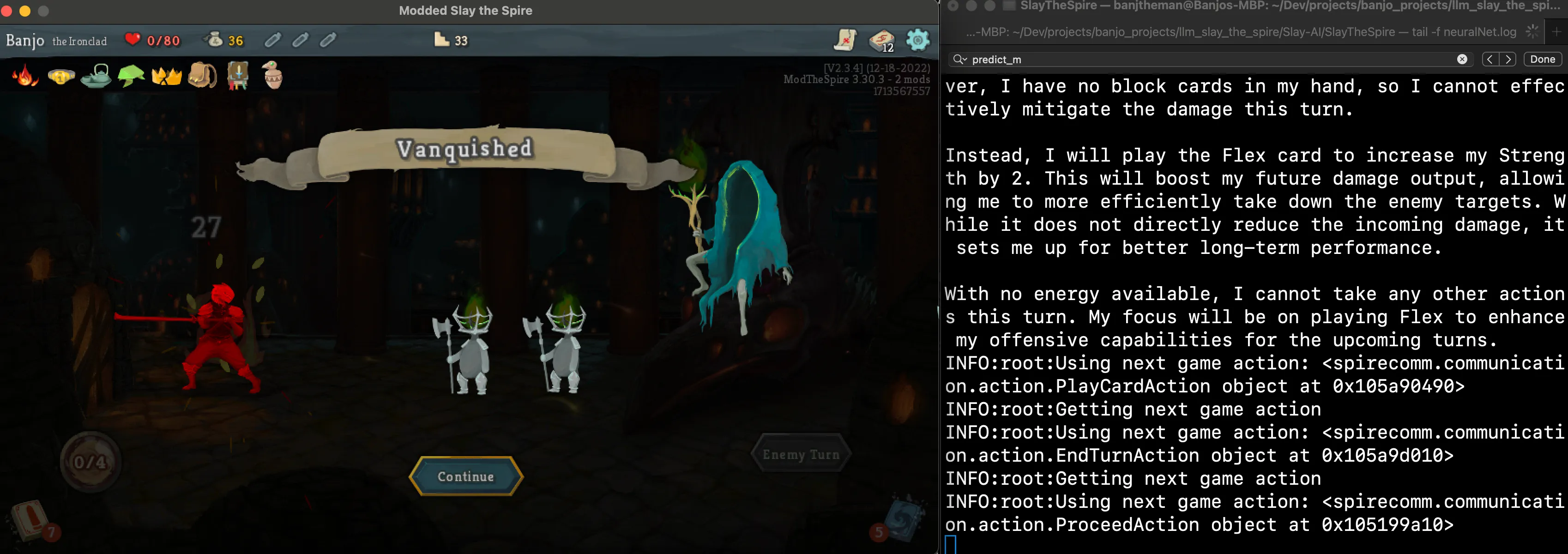

I Built an LLM Bot in 3 Hours to Conquer Slay the Spire

Learn the Methods I Used with Amazon Q to Build in Record Time

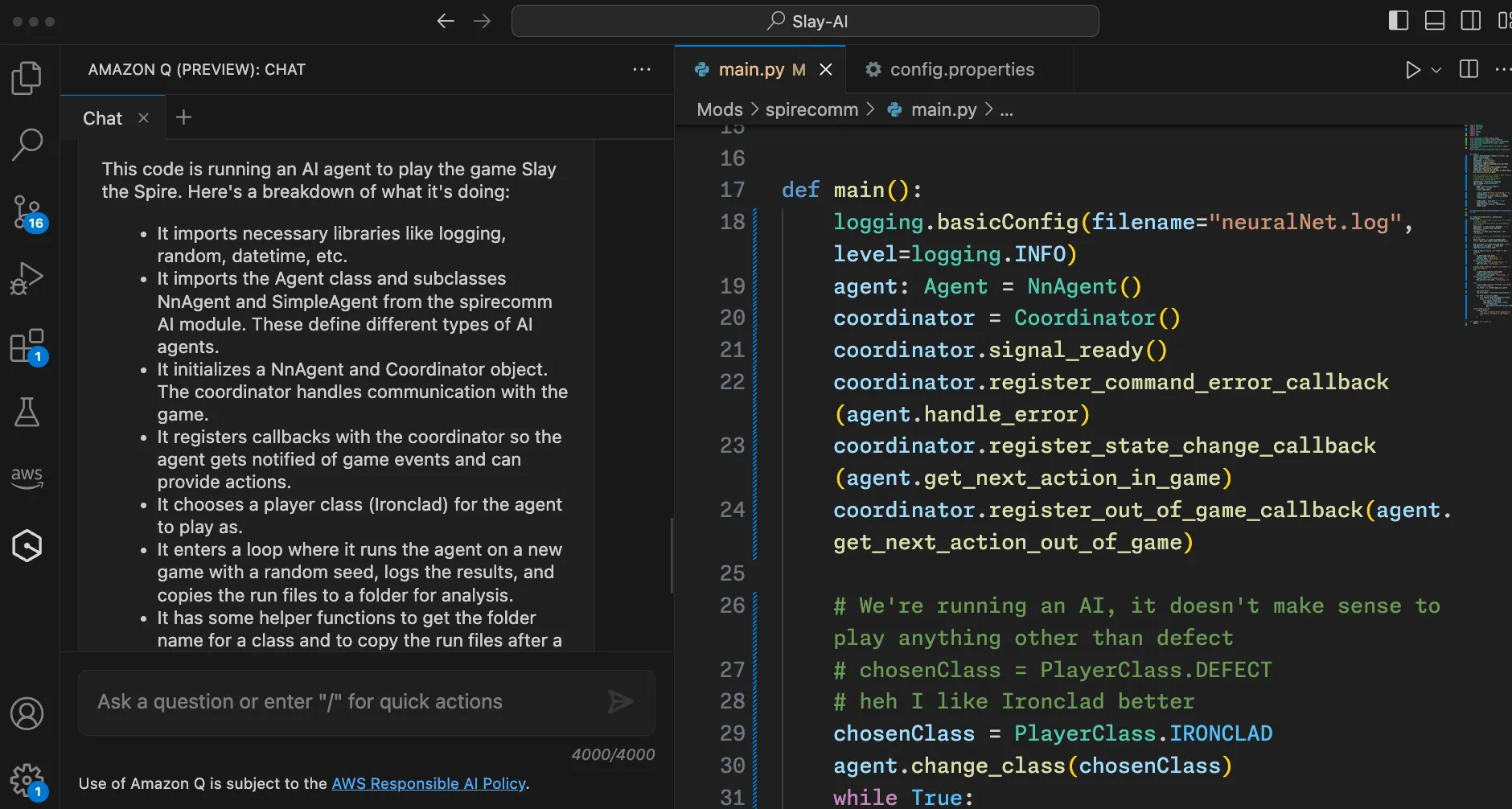

main.py. To gain a high-level understanding of the code's functionality, I turned to Amazon Q, asking it to explain the code with a simple prompt: "What does this code do?"

It imports the Agent class and subclasses NnAgent and SimpleAgent from the spirecomm AI module. These define different types of AI agents.

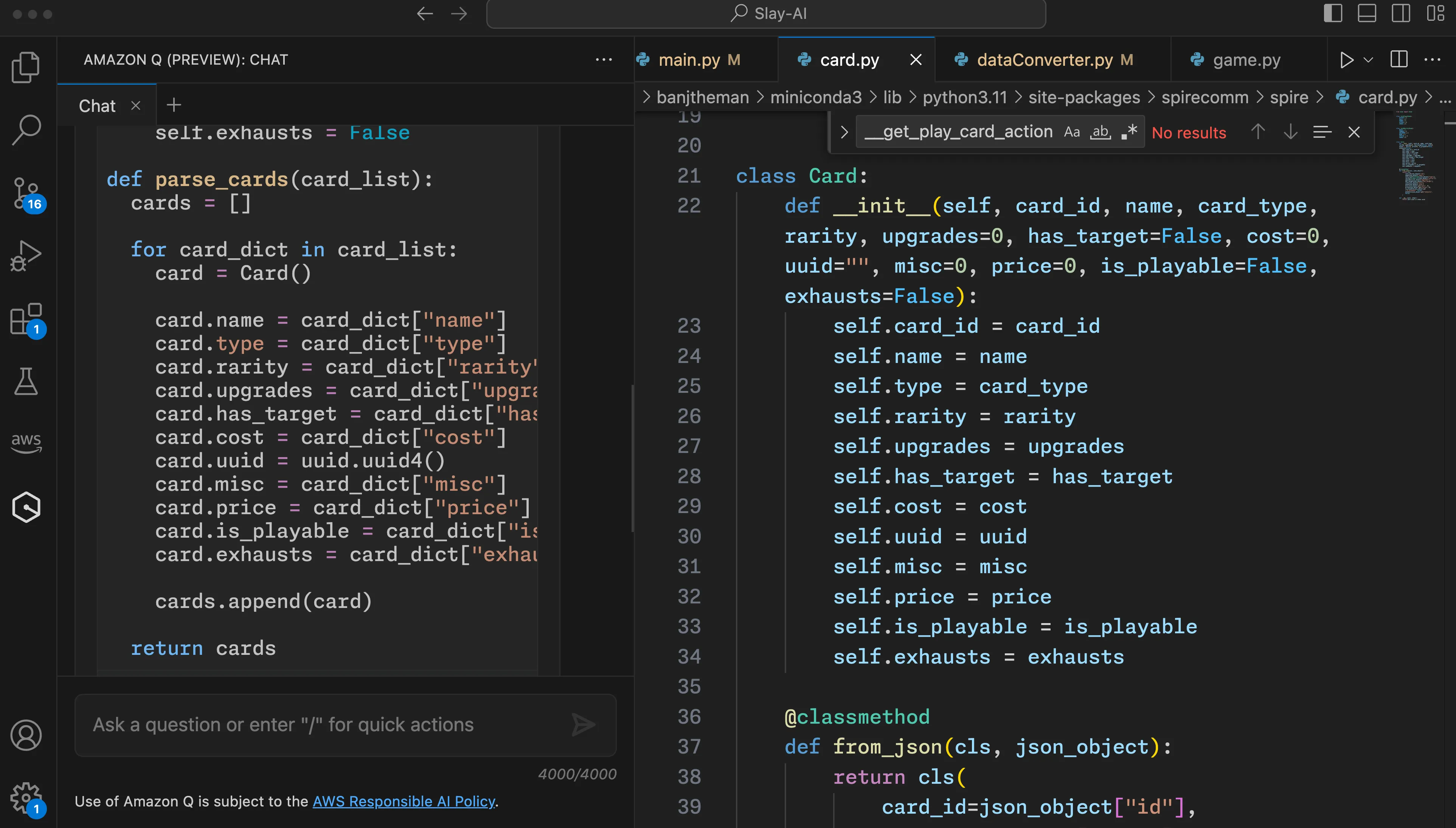

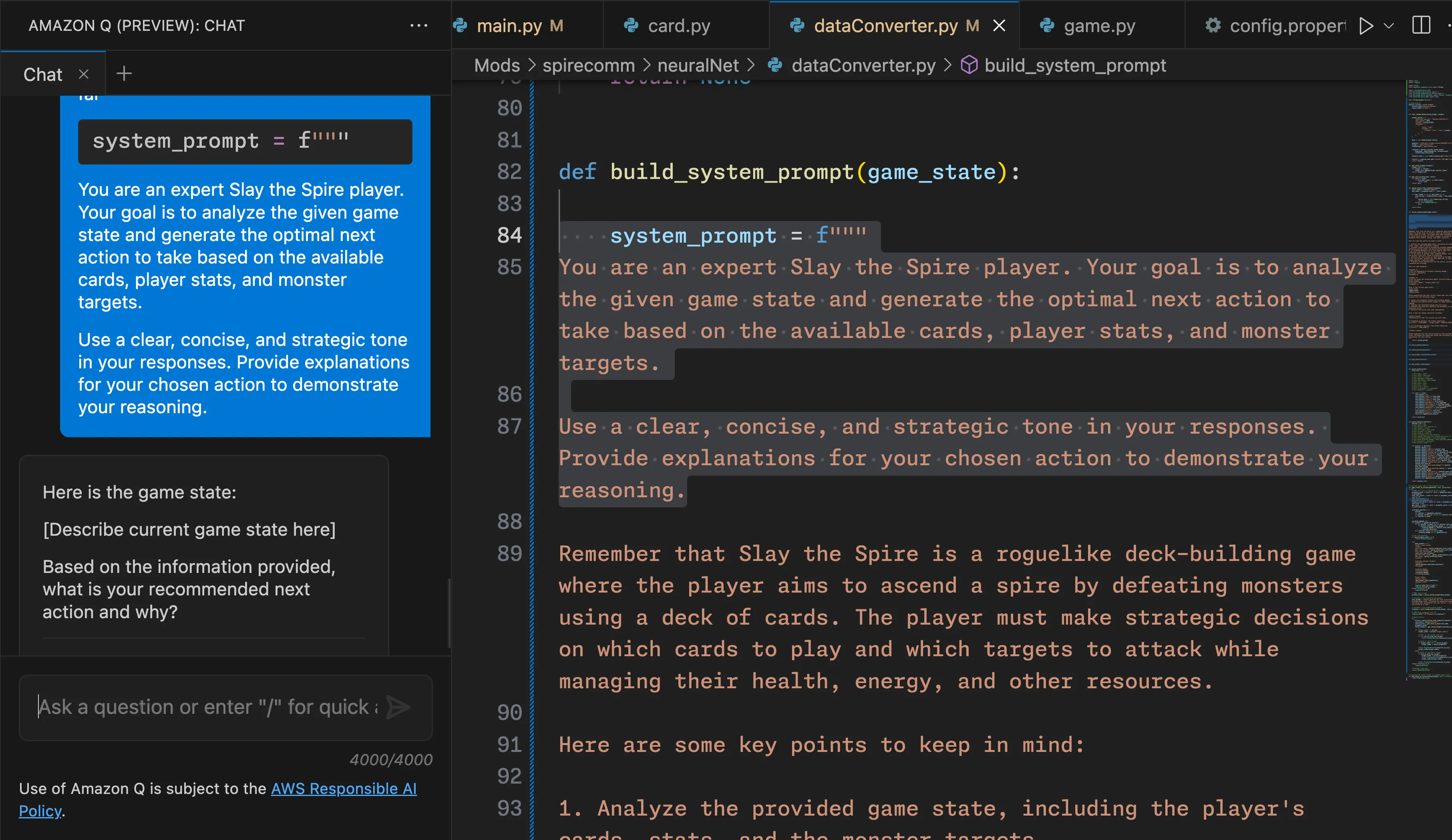

SimpleAgent class, which uses rule-based heuristics to determine actions, such as playing zero-cost cards first or using block cards when facing significant incoming damage. My goal was to replace these if-then statements with an LLM call that takes the game state data as input and returns the next action to take.

SimpleAgent served as a good starting point, so I investigated how the NeuralNetAgent class worked. To my surprise, it was completely empty, only calling the super() method of the base Agent class. Instead of creating a new agent class from scratch, I decided to update the predefined one with my own logic.

However, I encountered a challenge: the game information was stored in classes without functions to convert them into strings for my LLM prompt.
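To make the string-conversion problem concrete, here is a minimal sketch of one way to flatten game objects into prompt text. The `Card`, `Monster`, and `GameState` classes and the `describe_state` helper are hypothetical stand-ins invented for illustration; they are not spirecomm's actual classes, whose fields may differ.

```python
from dataclasses import dataclass, field


@dataclass
class Card:
    # Hypothetical stand-in for a card object; not spirecomm's real class.
    name: str
    cost: int
    has_target: bool = False


@dataclass
class Monster:
    # Hypothetical stand-in for an enemy object.
    name: str
    current_hp: int
    incoming_damage: int = 0


@dataclass
class GameState:
    # Hypothetical stand-in for the state the agent sees each turn.
    player_hp: int
    energy: int
    hand: list = field(default_factory=list)
    monsters: list = field(default_factory=list)


def describe_state(state: GameState) -> str:
    """Flatten the state into plain text that can be pasted into an LLM prompt."""
    lines = [f"Player HP: {state.player_hp}", f"Energy: {state.energy}", "Hand:"]
    for i, card in enumerate(state.hand):
        target_note = "needs target" if card.has_target else "no target"
        lines.append(f"  [{i}] {card.name} (cost {card.cost}, {target_note})")
    lines.append("Monsters:")
    for i, monster in enumerate(state.monsters):
        lines.append(
            f"  [{i}] {monster.name} "
            f"(HP {monster.current_hp}, incoming damage {monster.incoming_damage})"
        )
    return "\n".join(lines)
```

Including the index of each card and monster in the text lets the model answer with indices, which are easier to parse and validate than free-form names.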

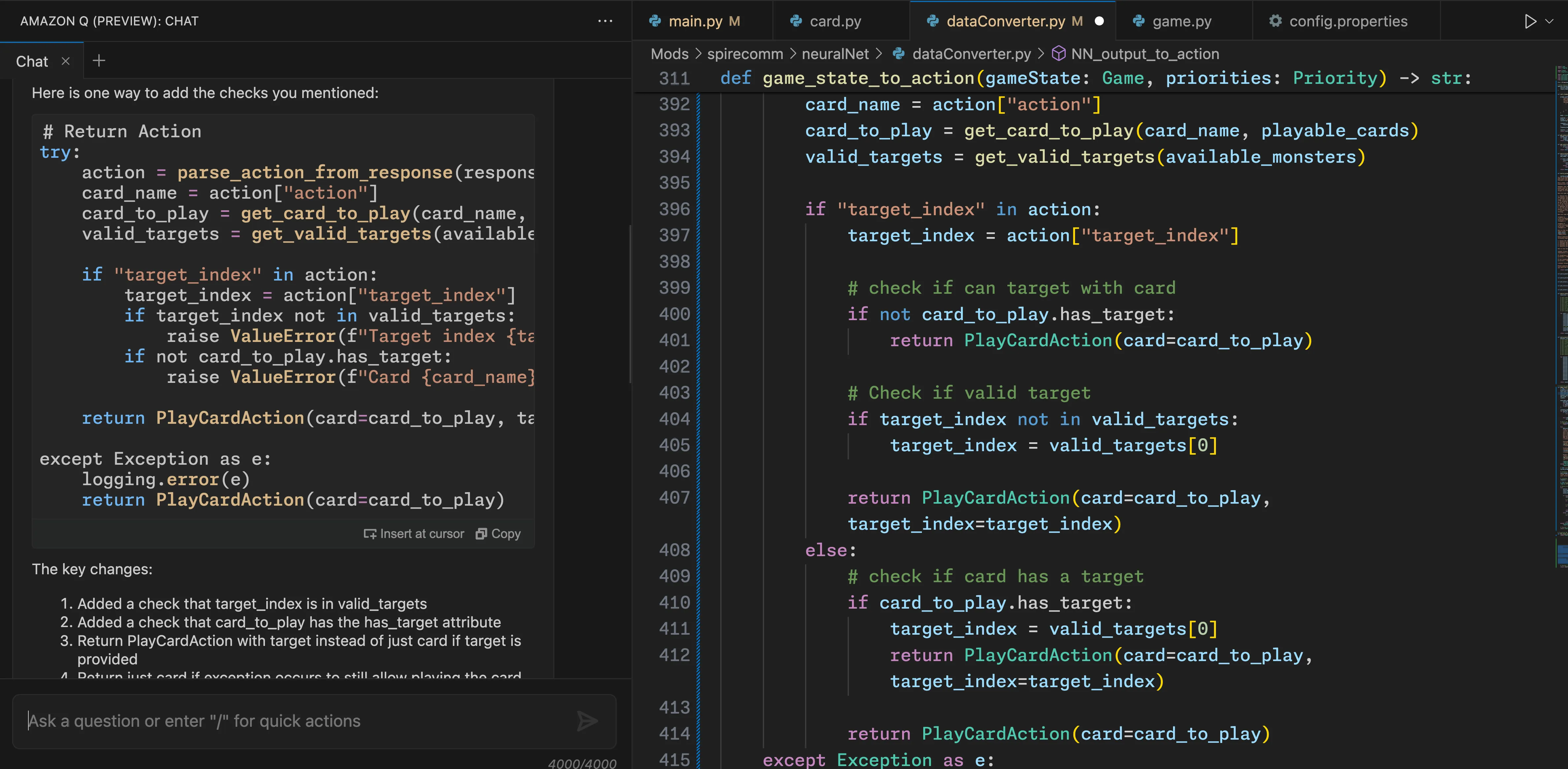

Can you fix this code, so it checks if the target_index is in valid_targets, and if the card_to_play.has_target == True? I was getting errors in my code by not having correct error checking.
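The check described in that prompt might look something like the sketch below. The names `card_to_play`, `has_target`, `target_index`, and `valid_targets` come from the prompt itself; the `resolve_target` helper and the fallback to the first valid target are assumptions made for illustration, not the code Amazon Q produced.

```python
from types import SimpleNamespace


def resolve_target(card_to_play, target_index, valid_targets):
    """Return a safe target index, or None when the card takes no target."""
    # Cards without a target should never be sent with a target index.
    if not card_to_play.has_target:
        return None
    # A targeted card cannot be played if nothing can be targeted.
    if not valid_targets:
        raise ValueError("card needs a target but no valid targets exist")
    # Accept the index the LLM proposed only if it is actually valid.
    if target_index in valid_targets:
        return target_index
    # Assumption: fall back to the first valid target instead of failing.
    return valid_targets[0]


# Usage with simple stand-in card objects (hypothetical, for illustration only).
strike = SimpleNamespace(name="Strike", has_target=True)
defend = SimpleNamespace(name="Defend", has_target=False)
print(resolve_target(strike, 5, [0, 1]))
print(resolve_target(defend, 5, [0, 1]))
```

Validating the model's answer before acting on it matters because an LLM can return an index that does not correspond to any living monster.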

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.