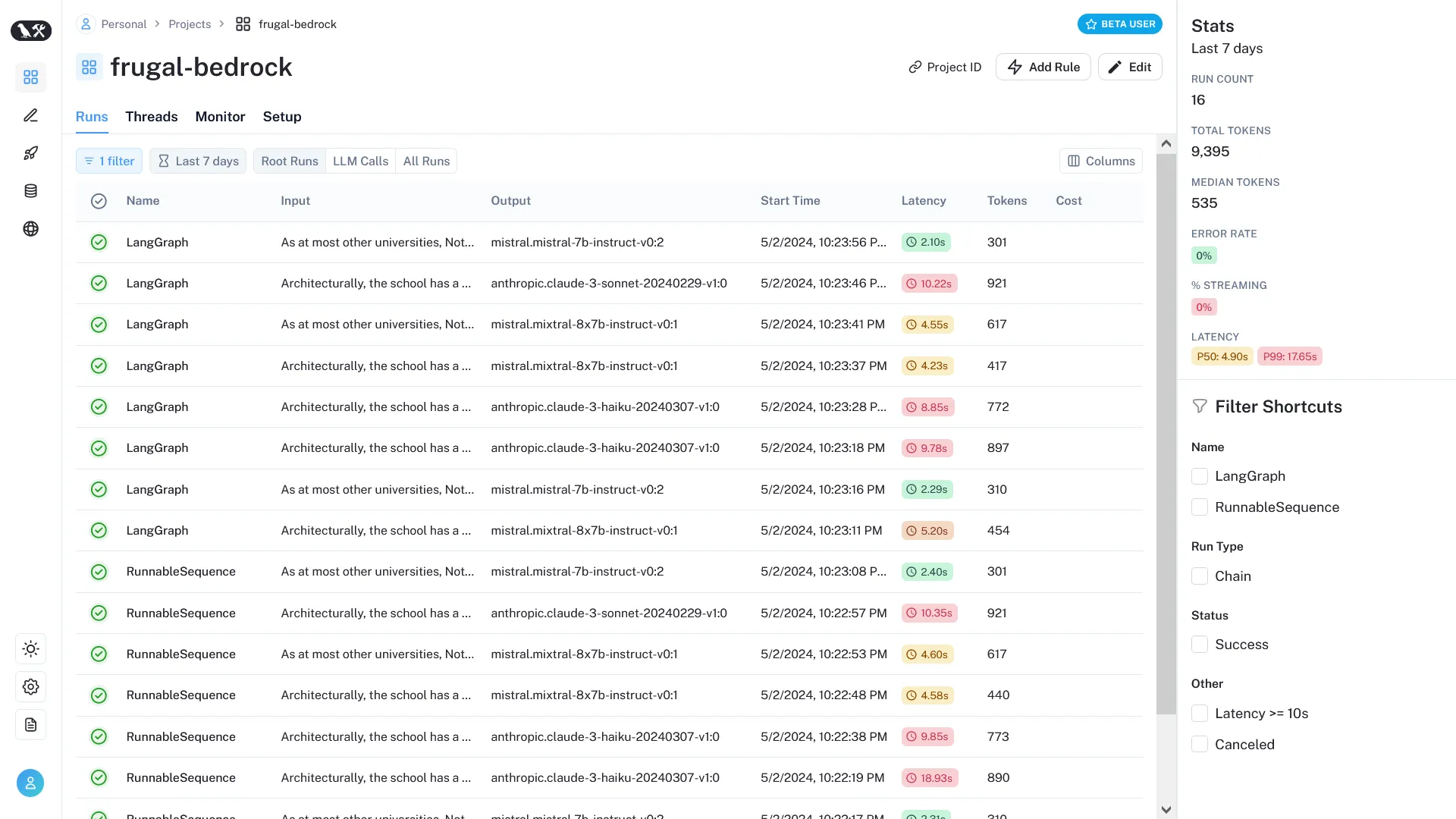

Fighting Hallucinations with LLM Cascades 🍄

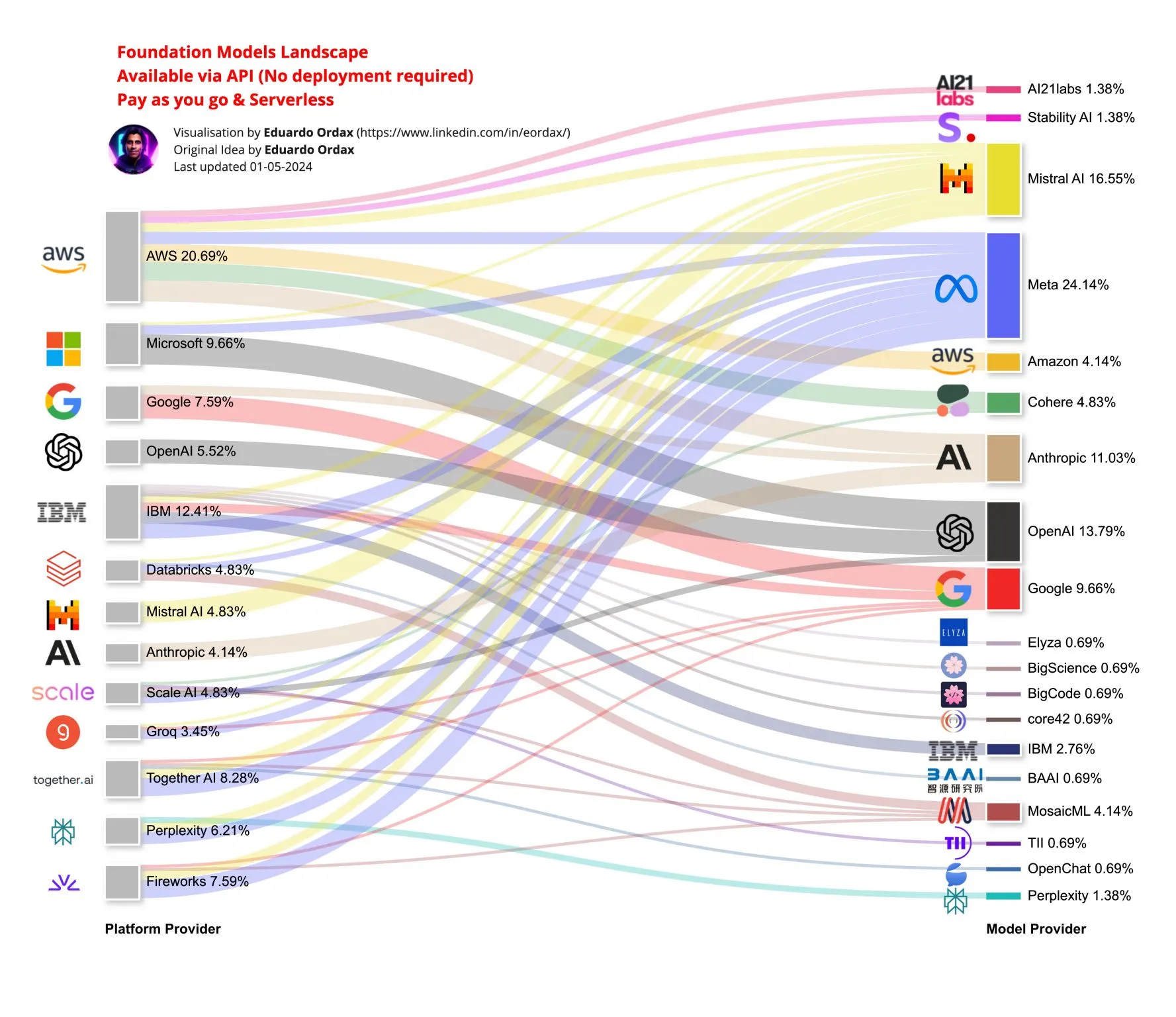

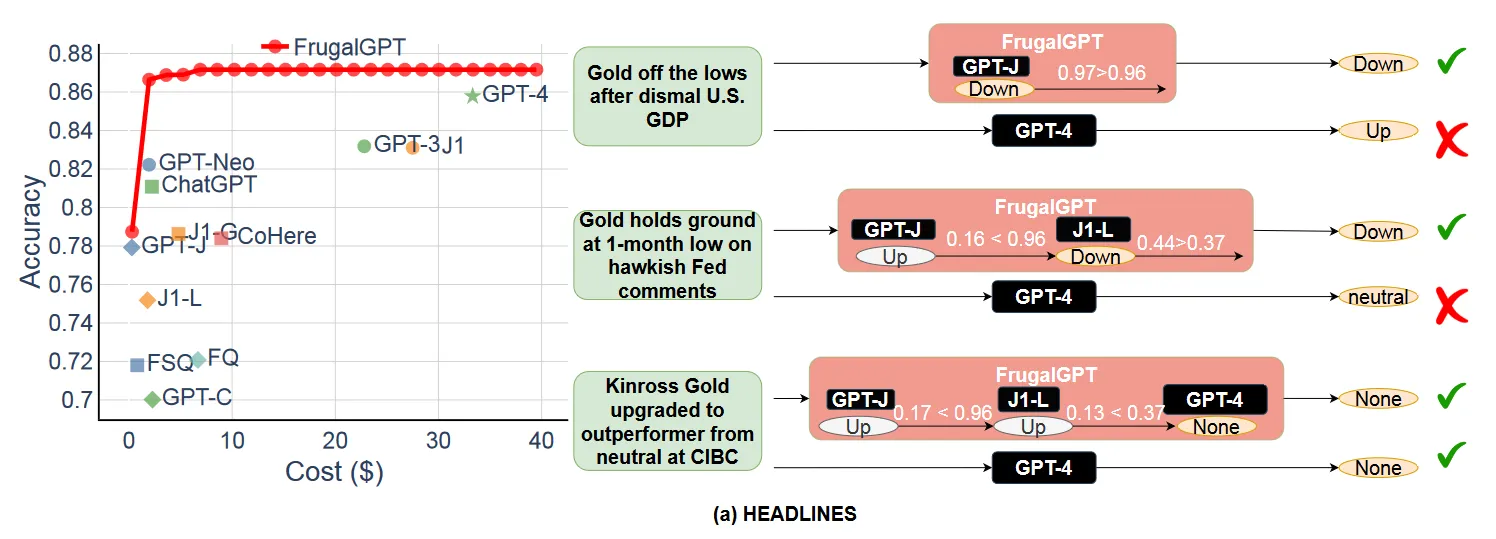

Learn how to implement FrugalGPT-style LLM cascades on top of Amazon Bedrock using LangChain and LangGraph without breaking the bank.

"Everything you are comes from choices" ―Jeff Bezos

💡 If you're looking for guidance, AWS Community has some great posts on how to handle this decision. See, for example, How to choose your LLM by Elizabeth Fuentes and Choose the best foundational model for your AI applications by Suman Debnath.

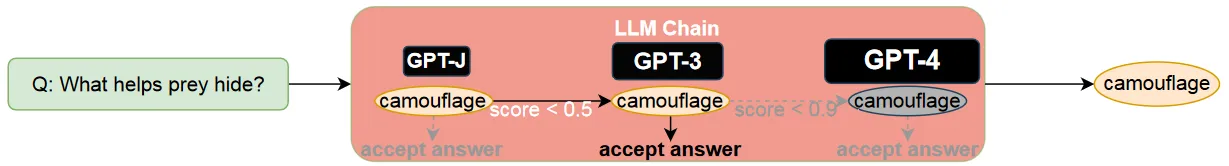

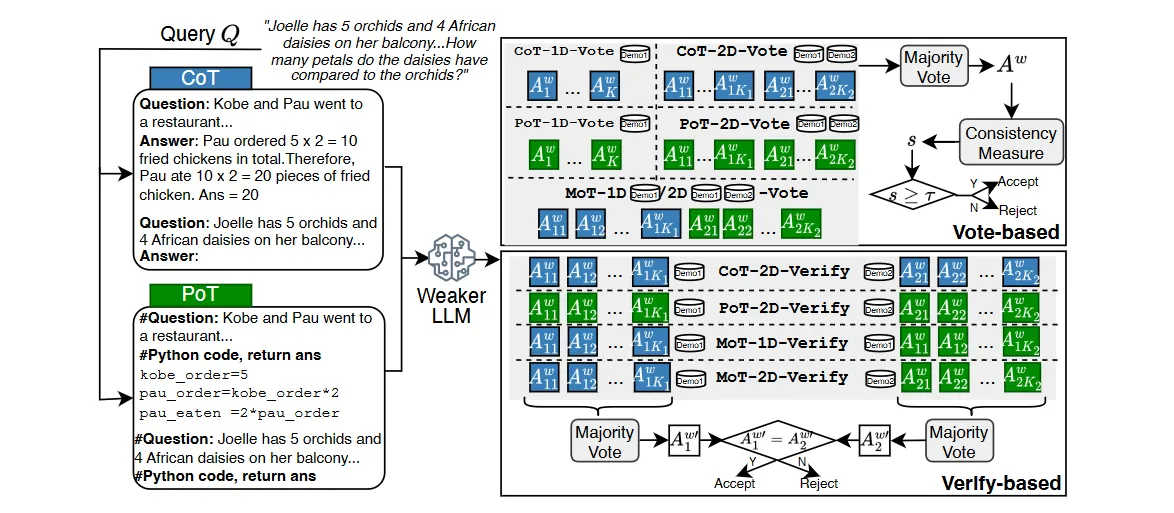

- a scoring function which evaluates a generated answer to a question by producing a reliability score between

0👎 and1👍 and - an LLM router that dynamically selects the optimal sequence of models to query

- Invoke a model on the list to generate an answer

- Use the scoring function to evaluate how good it is

- Returns the answer if the score is higher than a given threshold (which can be different for each model)

- Otherwise, it moves to the next model on the list and goes back to step 1

👨💻 If you're interested in the original implementation, the code is available on GitHub.

"(...) real-world applications call for the evaluation of other critical factors, including latency, fairness, privacy, and environmental impact. Incorporating these elements into optimization methodologies while maintaining performance and cost-effectiveness is an important avenue for future research."

"Intuitively, it is very challenging to evaluate the difficulty and the answer correctness of a reasoning question solely based on its literal expression, even with a large enough LLM, since the errors could be nuanced despite the reasoning paths appearing promising."

Note for the brave ones: 🧐 While MoT-based LLM cascades are well beyond the scope of this post, the curious reader is strongly encouraged to read the paper.

💚 Frugality: Accomplish more with less. Constraints breed resourcefulness, self-sufficiency, and invention. There are no extra points for growing headcount, budget size, or fixed expense.

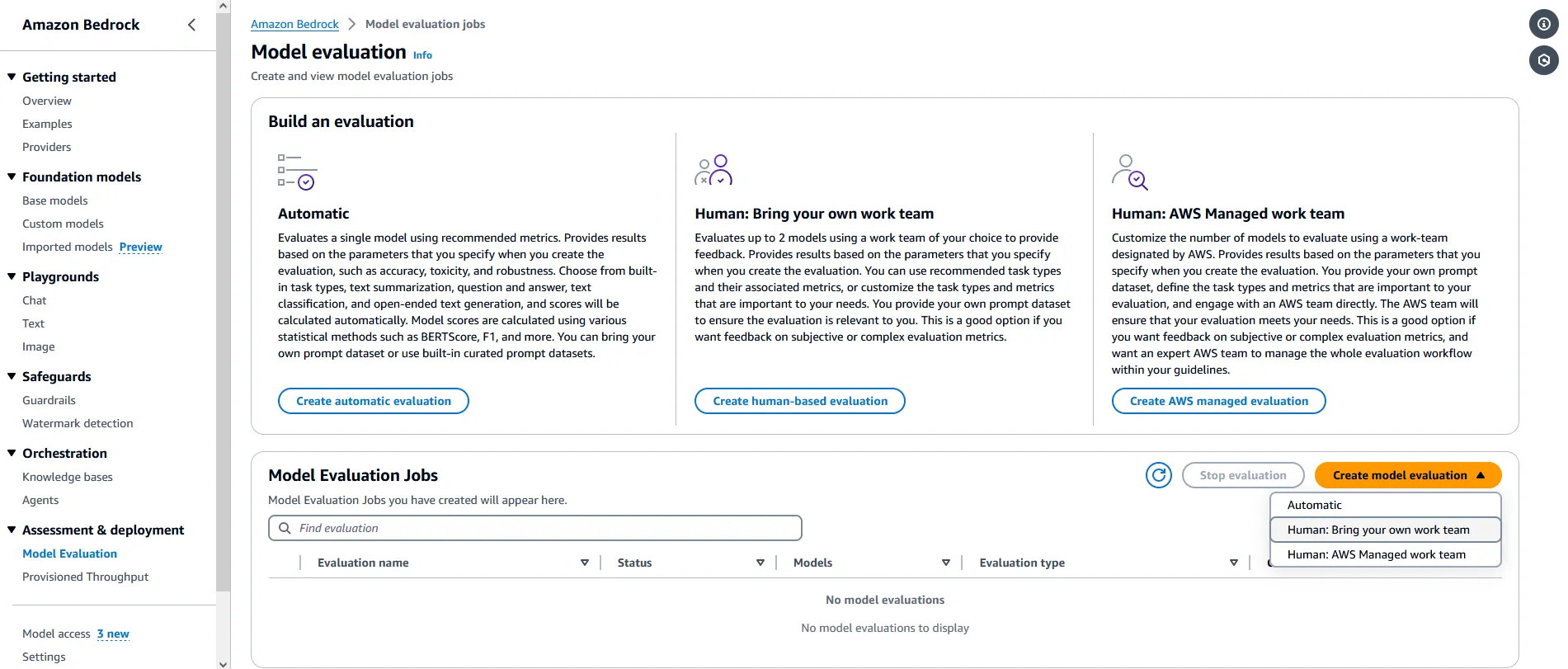

- Is the model hallucinating?

- Is the model generating NSFW content?

- How is the overall tone?

👨💻 All code and documentation for this post is available on GitHub.

🚫 TheBedrockChatclass available onlangchain_communityis on the deprecation path and will be removed with version0.3>>> Please don't use it!

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

r"""

______ _ ____ _ _

| ____| | | _ \ | | | |

| |__ _ __ _ _ __ _ __ _| | |_) | ___ __| |_ __ ___ ___| | __

| __| '__| | | |/ _` |/ _` | | _ < / _ \/ _` | '__/ _ \ / __| |/ /

| | | | | |_| | (_| | (_| | | |_) | __/ (_| | | | (_) | (__| <

|_| |_| \__,_|\__, |\__,_|_|____/ \___|\__,_|_| \___/ \___|_|\_\

__/ |

|___/

FrugalGPT-style LLM cascades for fighting hallucinations.

.-'''-.

/* * * *\

:_.-:`:-._;

(_)

\|/(_)\|/

"""

import os

import json

from operator import itemgetter

from typing import (

Any,

List,

Tuple,

TypedDict

)

# HuggingFace 🤗

from datasets import load_dataset

from transformers import pipeline

# LangChain Core 🦜🔗

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import (

RunnableLambda,

RunnableParallel,

RunnableBranch,

RunnablePassthrough

)

# LangChain AWS ☁️

from langchain_aws import ChatBedrock

# LangGraph 🦜🕸️

from langgraph.graph import StateGraph, END1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

# For more information, see

# https://vectara.com/blog/automating-hallucination-detection-introducing-vectara-factual-consistency-score/

hallucination_detector = pipeline(

task="text-classification",

model="vectara/hallucination_evaluation_model"

)

def scorer(inpt: dict) -> float:

"""

Returns a score between 0 and 1 indicating whether the model is hallucinating.

"""

result = hallucination_detector({'text': inpt['context'], 'text_pair': inpt['answer']})

return result['score'] # 0 -> hallucination, 1 -> factually consistent

def batch_scorer(inpts: List[dict]) -> List[float]:

"""

Scores multiple inputs in one go.

"""

inputs = list(map(lambda inpt: {'text': inpt['context'], 'text_pair': inpt['answer']}, inpts))

results = hallucination_detector(inputs)

return list(map(lambda result: result['score'], results))0 😵 and 1 🎩, where 0 means that the model is hallucinating and 1 indicates factual consistency.☝️ For more information about the model and FCS, see the Automating Hallucination Detection: Introducing the Vectara Factual Consistency Score post by Vectara.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

def build_chain(model_id: str, threshold: float, scoringf: callable = scorer):

"""

Creates a model chain for question answering.

"""

prompt = ChatPromptTemplate.from_template("{context}\n\n{question}")

llm = ChatBedrock(model_id=model_id, model_kwargs={'temperature': 0})

output_parser = StrOutputParser()

return RunnableParallel({

'context': itemgetter('context'),

'question': itemgetter('question'),

'model': lambda _: model_id,

'answer': prompt | llm | output_parser

}) | RunnableParallel({

'context': itemgetter('context'),

'question': itemgetter('question'),

'model': itemgetter('model'),

'answer': itemgetter('answer'),

'score': RunnableLambda(scoringf),

'threshold': lambda _: str(threshold)

})1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

def llm_cascade(model_ids: List[str], thresholds: List[float]):

"""

Creates a static LLM cascade for question answering.

"""

if len(model_ids) != len(thresholds):

raise ValueError("The list of models and thresholds must have the same size.")

def check_score(output):

score = float(output['score'])

threshold = float(output['threshold'])

if score < threshold:

return True

return False

chains = [

build_chain(model_id, threshold)

for model_id, threshold in zip(model_ids, thresholds)

]

llmc = chains[0]

for chain in chains[1:]:

llmc = llmc | RunnableBranch(

(lambda output: check_score(output), chain),

RunnablePassthrough()

)

return llmc1

2

3

4

5

6

7

8

9

10

11

12

13

def build_chain_lg(model_id: str):

"""

Creates a model chain for question answering (LangGraph version).

"""

prompt = ChatPromptTemplate.from_template("{context}\n\n{question}")

llm = ChatBedrock(model_id=model_id, model_kwargs={'temperature': 0})

output_parser = StrOutputParser()

return RunnableParallel({

'context': itemgetter('context'),

'question': itemgetter('question'),

'model': lambda _: model_id,

'answer': prompt | llm | output_parser

})1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

class ModelState(TypedDict):

"""

State class to pass the model output along the sequence.

"""

context: str

question: str

model: str

answer: str

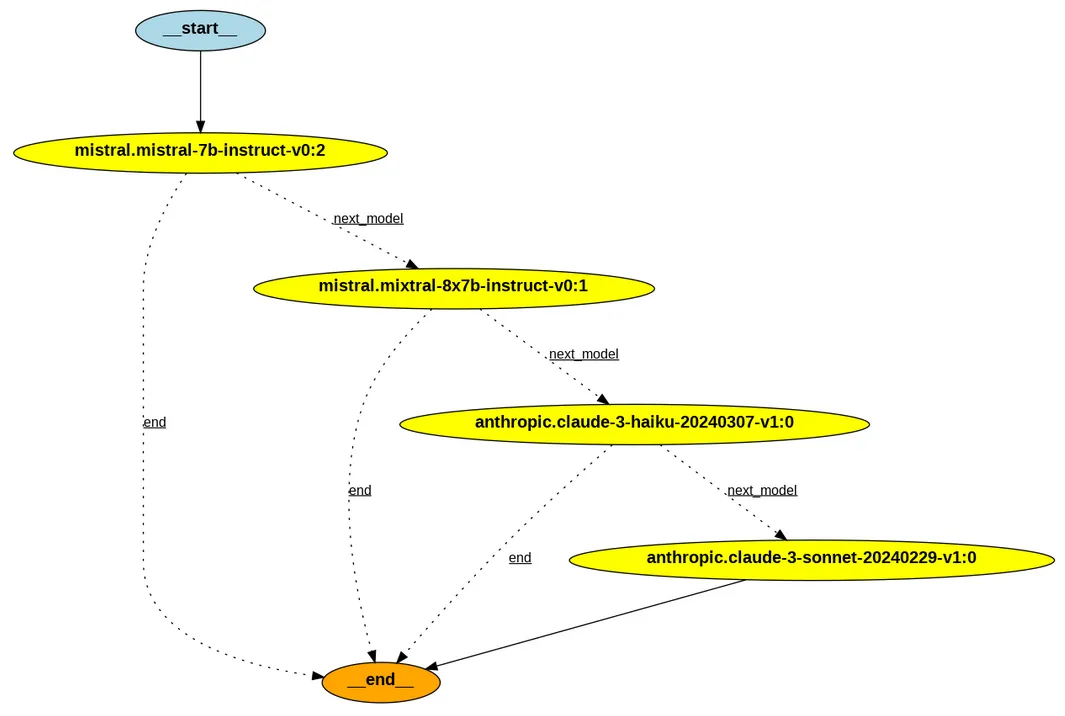

def llm_cascade_lg(model_ids: List[str], thresholds: List[float], scoringf: callable = scorer):

"""

Creates a static LLM cascade for question answering (LangGraph version).

"""

if len(model_ids) != len(thresholds):

raise ValueError("The list of models and thresholds must have the same size.")

def check_score(state: ModelState):

if scoringf(state) < thresholds[model_ids.index(state['model'])]:

return "next_model"

return "end"

graph = StateGraph(ModelState)

for i, model_id in enumerate(model_ids):

graph.add_node(model_id, build_chain_lg(model_id))

if i < len(model_ids) - 1:

graph.add_conditional_edges(model_id, check_score, {

'next_model': model_ids[i+1],

'end': END

})

else:

graph.add_edge(model_ids[-1], END)

graph.set_entry_point(model_ids[0])

return graph.compile()1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

if os.path.isfile("pricing.json"):

with open("pricing.json", encoding="utf-8") as f:

BEDROCK_PRICING = json.load(f)

else:

BEDROCK_PRICING = None

def compute_cost(response: dict, models: List) -> Tuple[float, float]:

"""

Returns the actual cost of running the chain and the

predicted cost if we were to use only the best model.

"""

end_model = response['model']

query = response['context'] + response['question']

answer = response['answer']

# Assumption: 1 token ~ 4 chars

in_tokens = len(query) // 4

out_tokens = len(answer) // 4

cost = 0

for model in models:

model_pricing = BEDROCK_PRICING[model]

cost += (in_tokens/1000)*model_pricing.get('input', 0.0) + \

(out_tokens/1000)*model_pricing.get('output', 0.0)

if model == end_model:

break

# Assumption: output tokens (LLM cascade) == # output tokens (best model)

best_model_pricing = BEDROCK_PRICING[models[-1]]

best_cost = (in_tokens/1000)*best_model_pricing.get('input', 0.0) + \

(out_tokens/1000)*best_model_pricing.get('output', 0.0)

return cost, best_costpricing.json) for Anthropic and Mistral models1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

{

"anthropic.claude-3-haiku-20240307-v1:0": {

"input": 0.00025,

"output": 0.00125

},

"anthropic.claude-3-sonnet-20240229-v1:0": {

"input": 0.003,

"output": 0.015

},

"anthropic.claude-3-opus-20240229-v1:0": {

"input": 0.015,

"output": 0.075

},

"anthropic.claude-instant-v1": {

"input": 0.0008,

"output": 0.0024

},

"anthropic.claude-v2": {

"input": 0.008,

"output": 0.024

},

"anthropic.claude-v2:1": {

"input": 0.008,

"output": 0.024

},

"mistral.mistral-7b-instruct-v0:2": {

"input": 0.00015,

"output": 0.0002

},

"mistral.mistral-large-2402-v1:0": {

"input": 0.008,

"output": 0.024

},

"mistral.mixtral-8x7b-instruct-v0:1": {

"input": 0.00045,

"output": 0.0007

}

}

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

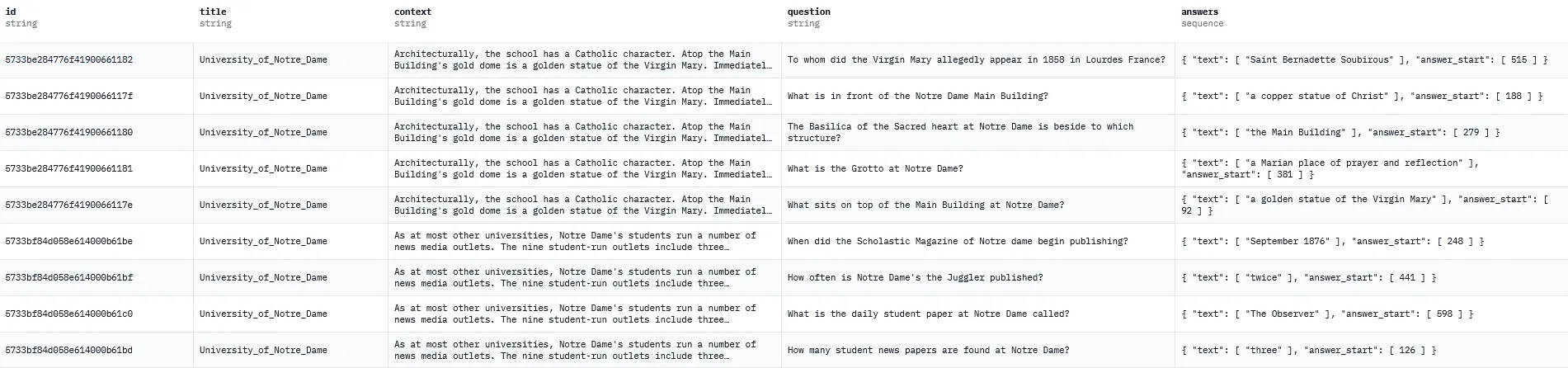

# Load a small sample of the SQuAD dataset

# https://huggingface.co/datasets/rajpurkar/squad

# https://huggingface.co/docs/transformers/en/tasks/question_answering#load-squad-dataset

squad = load_dataset("squad", split="train[:10]")

# and split it up

squad = squad.train_test_split(test_size=0.2, seed=42)

def test_llm_cascade(llmc: Any, dataset: List[dict]):

"""

Invokes the LLM cascade against a collection of context/question pairs

and returns the final answer and a cost analysis.

"""

for sample in dataset:

response = llmc.invoke({'context': sample['context'], 'question': sample['question']})

print(json.dumps(response, indent=4))

cost, best_cost = compute_cost(response, models)

cost_delta = (cost - best_cost) * 100 / best_cost

print(f"Cost: ${cost} ({cost_delta:+.2f}%)")

1

2

3

4

5

6

7

8

9

10

11

12

13

14

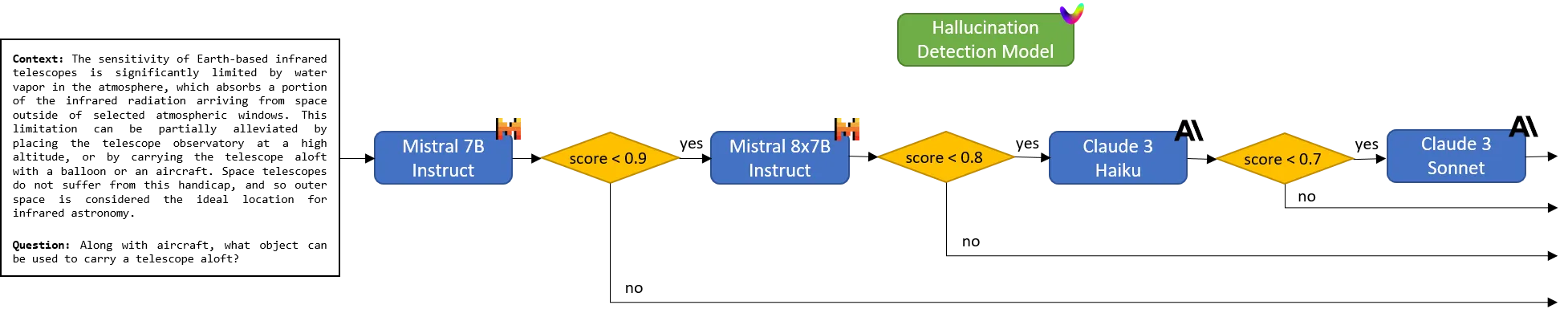

# LLM cascade configuration

models = [

"mistral.mistral-7b-instruct-v0:2",

"mistral.mixtral-8x7b-instruct-v0:1",

"anthropic.claude-3-haiku-20240307-v1:0",

"anthropic.claude-3-sonnet-20240229-v1:0"

]

thresholds = [0.9, 0.8, 0.7, 0.0]

# Create LLM cascade

llmc_lc = llm_cascade(models, thresholds)

# and test it

test_llm_cascade(llmc_lc, squad['train'])0) is not actually used. Just think of it as a gentle reminder that we'll accept everything coming from the best (last) model if the LLM cascade execution ever gets there.1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

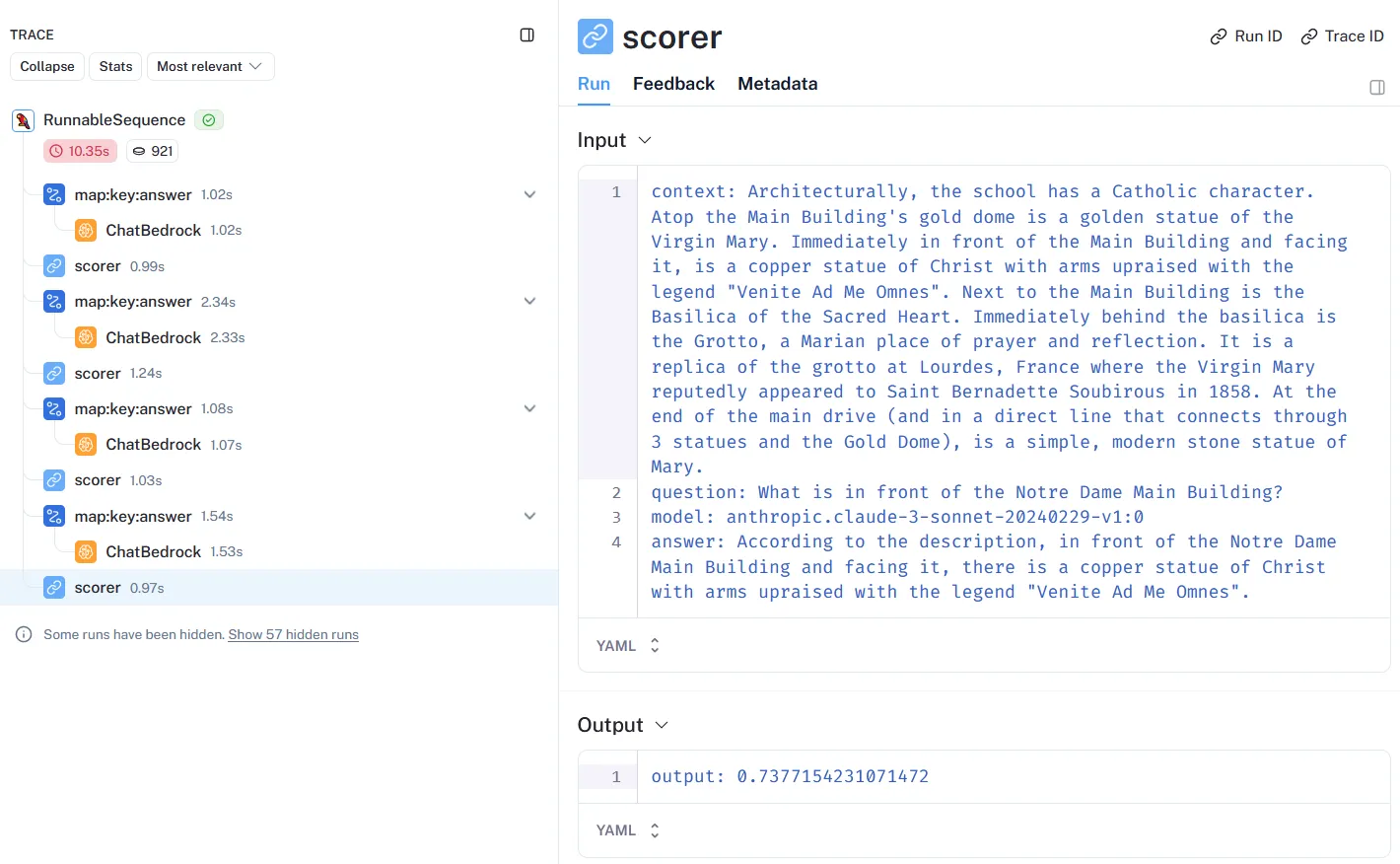

{

"context": "Architecturally, the school has a Catholic character. Atop the Main Building's gold dome is a golden statue of the Virgin Mary. Immediately in front of the Main Building and facing it, is a copper statue of Christ with arms upraised with the legend \"Venite Ad Me Omnes\". Next to the Main Building is the Basilica of the Sacred Heart. Immediately behind the basilica is the Grotto, a Marian place of prayer and reflection. It is a replica of the grotto at Lourdes, France where the Virgin Mary reputedly appeared to Saint Bernadette Soubirous in 1858. At the end of the main drive (and in a direct line that connects through 3 statues and the Gold Dome), is a simple, modern stone statue of Mary.",

"question": "To whom did the Virgin Mary allegedly appear in 1858 in Lourdes France?",

"model": "mistral.mixtral-8x7b-instruct-v0:1",

"answer": " The Virgin Mary, according to the Catholic faith, allegedly appeared to Saint Bernadette Soubirous in 1858 in Lourdes, France. This is commemorated in the Grotto at the school you described, which is a replica of the grotto at Lourdes."

}

Cost: $0.0001677 (-88.50%)

{

"context": "As at most other universities, Notre Dame's students run a number of news media outlets. The nine student-run outlets include three newspapers, both a radio and television station, and several magazines and journals. Begun as a one-page journal in September 1876, the Scholastic magazine is issued twice monthly and claims to be the oldest continuous collegiate publication in the United States. The other magazine, The Juggler, is released twice a year and focuses on student literature and artwork. The Dome yearbook is published annually. The newspapers have varying publication interests, with The Observer published daily and mainly reporting university and other news, and staffed by students from both Notre Dame and Saint Mary's College. Unlike Scholastic and The Dome, The Observer is an independent publication and does not have a faculty advisor or any editorial oversight from the University. In 1987, when some students believed that The Observer began to show a conservative bias, a liberal newspaper, Common Sense was published. Likewise, in 2003, when other students believed that the paper showed a liberal bias, the conservative paper Irish Rover went into production. Neither paper is published as often as The Observer; however, all three are distributed to all students. Finally, in Spring 2008 an undergraduate journal for political science research, Beyond Politics, made its debut.",

"question": "What is the daily student paper at Notre Dame called?",

"model": "mistral.mistral-7b-instruct-v0:2",

"answer": " The daily student paper at Notre Dame is called \"The Observer.\" It is an independent publication and does not have any editorial oversight from the University."

}

Cost: $6.259999999999999e-05 (-96.30%)

{

"context": "Architecturally, the school has a Catholic character. Atop the Main Building's gold dome is a golden statue of the Virgin Mary. Immediately in front of the Main Building and facing it, is a copper statue of Christ with arms upraised with the legend \"Venite Ad Me Omnes\". Next to the Main Building is the Basilica of the Sacred Heart. Immediately behind the basilica is the Grotto, a Marian place of prayer and reflection. It is a replica of the grotto at Lourdes, France where the Virgin Mary reputedly appeared to Saint Bernadette Soubirous in 1858. At the end of the main drive (and in a direct line that connects through 3 statues and the Gold Dome), is a simple, modern stone statue of Mary.",

"question": "What is the Grotto at Notre Dame?",

"model": "anthropic.claude-3-haiku-20240307-v1:0",

"answer": "Based on the description provided, the Grotto at Notre Dame is a replica of the grotto at Lourdes, France where the Virgin Mary is said to have appeared to Saint Bernadette Soubirous in 1858.\n\nThe key details are:\n\n1. Immediately behind the Basilica of the Sacred Heart is the Grotto.\n2. The Grotto is described as \"a Marian place of prayer and reflection.\"\n3. It is specifically noted as \"a replica of the grotto at Lourdes, France where the Virgin Mary reputedly appeared to Saint Bernadette Soubirous in 1858.\"\n\nSo the Grotto at Notre Dame is a recreated version of the famous Marian shrine at Lourdes, France, where the Virgin Mary is believed to have appeared to Saint Bernadette. It serves as a place of prayer and reflection for the Notre Dame community."

}

Cost: $0.0005632 (-83.42%)

{

"context": "Architecturally, the school has a Catholic character. Atop the Main Building's gold dome is a golden statue of the Virgin Mary. Immediately in front of the Main Building and facing it, is a copper statue of Christ with arms upraised with the legend \"Venite Ad Me Omnes\". Next to the Main Building is the Basilica of the Sacred Heart. Immediately behind the basilica is the Grotto, a Marian place of prayer and reflection. It is a replica of the grotto at Lourdes, France where the Virgin Mary reputedly appeared to Saint Bernadette Soubirous in 1858. At the end of the main drive (and in a direct line that connects through 3 statues and the Gold Dome), is a simple, modern stone statue of Mary.",

"question": "The Basilica of the Sacred heart at Notre Dame is beside to which structure?",

"model": "anthropic.claude-3-haiku-20240307-v1:0",

"answer": "According to the description, the Basilica of the Sacred Heart is located next to the Main Building at the University of Notre Dame.\n\nThe key details are:\n\n1. \"Next to the Main Building is the Basilica of the Sacred Heart.\"\n2. The description mentions the \"Main Building's gold dome\" and other features in front of the Main Building.\n\nSo the Basilica of the Sacred Heart is situated adjacent to the Main Building on the Notre Dame campus."

}

Cost: $0.00039755 (-82.02%)

{

"context": "Architecturally, the school has a Catholic character. Atop the Main Building's gold dome is a golden statue of the Virgin Mary. Immediately in front of the Main Building and facing it, is a copper statue of Christ with arms upraised with the legend \"Venite Ad Me Omnes\". Next to the Main Building is the Basilica of the Sacred Heart. Immediately behind the basilica is the Grotto, a Marian place of prayer and reflection. It is a replica of the grotto at Lourdes, France where the Virgin Mary reputedly appeared to Saint Bernadette Soubirous in 1858. At the end of the main drive (and in a direct line that connects through 3 statues and the Gold Dome), is a simple, modern stone statue of Mary.",

"question": "What sits on top of the Main Building at Notre Dame?",

"model": "anthropic.claude-3-haiku-20240307-v1:0",

"answer": "According to the description, atop the Main Building's gold dome is a golden statue of the Virgin Mary."

}

Cost: $0.00021185 (-77.29%)

{

"context": "As at most other universities, Notre Dame's students run a number of news media outlets. The nine student-run outlets include three newspapers, both a radio and television station, and several magazines and journals. Begun as a one-page journal in September 1876, the Scholastic magazine is issued twice monthly and claims to be the oldest continuous collegiate publication in the United States. The other magazine, The Juggler, is released twice a year and focuses on student literature and artwork. The Dome yearbook is published annually. The newspapers have varying publication interests, with The Observer published daily and mainly reporting university and other news, and staffed by students from both Notre Dame and Saint Mary's College. Unlike Scholastic and The Dome, The Observer is an independent publication and does not have a faculty advisor or any editorial oversight from the University. In 1987, when some students believed that The Observer began to show a conservative bias, a liberal newspaper, Common Sense was published. Likewise, in 2003, when other students believed that the paper showed a liberal bias, the conservative paper Irish Rover went into production. Neither paper is published as often as The Observer; however, all three are distributed to all students. Finally, in Spring 2008 an undergraduate journal for political science research, Beyond Politics, made its debut.",

"question": "In what year did the student paper Common Sense begin publication at Notre Dame?",

"model": "mistral.mixtral-8x7b-instruct-v0:1",

"answer": " 1987\n\nIn 1987, when some students believed that The Observer began to show a conservative bias, a liberal newspaper, Common Sense was published at Notre Dame."

}

Cost: $0.0002577 (-84.82%)

{

"context": "Architecturally, the school has a Catholic character. Atop the Main Building's gold dome is a golden statue of the Virgin Mary. Immediately in front of the Main Building and facing it, is a copper statue of Christ with arms upraised with the legend \"Venite Ad Me Omnes\". Next to the Main Building is the Basilica of the Sacred Heart. Immediately behind the basilica is the Grotto, a Marian place of prayer and reflection. It is a replica of the grotto at Lourdes, France where the Virgin Mary reputedly appeared to Saint Bernadette Soubirous in 1858. At the end of the main drive (and in a direct line that connects through 3 statues and the Gold Dome), is a simple, modern stone statue of Mary.",

"question": "What is in front of the Notre Dame Main Building?",

"model": "anthropic.claude-3-sonnet-20240229-v1:0",

"answer": "According to the description, in front of the Notre Dame Main Building and facing it, there is a copper statue of Christ with arms upraised with the legend \"Venite Ad Me Omnes\"."

}

Cost: $0.0014707 (+20.75%)

{

"context": "As at most other universities, Notre Dame's students run a number of news media outlets. The nine student-run outlets include three newspapers, both a radio and television station, and several magazines and journals. Begun as a one-page journal in September 1876, the Scholastic magazine is issued twice monthly and claims to be the oldest continuous collegiate publication in the United States. The other magazine, The Juggler, is released twice a year and focuses on student literature and artwork. The Dome yearbook is published annually. The newspapers have varying publication interests, with The Observer published daily and mainly reporting university and other news, and staffed by students from both Notre Dame and Saint Mary's College. Unlike Scholastic and The Dome, The Observer is an independent publication and does not have a faculty advisor or any editorial oversight from the University. In 1987, when some students believed that The Observer began to show a conservative bias, a liberal newspaper, Common Sense was published. Likewise, in 2003, when other students believed that the paper showed a liberal bias, the conservative paper Irish Rover went into production. Neither paper is published as often as The Observer; however, all three are distributed to all students. Finally, in Spring 2008 an undergraduate journal for political science research, Beyond Politics, made its debut.",

"question": "How many student news papers are found at Notre Dame?",

"model": "mistral.mistral-7b-instruct-v0:2",

"answer": " There are three student-run newspapers at Notre Dame: The Observer, Common Sense, and Irish Rover."

}

Cost: $5.9399999999999994e-05 (-95.91%)1

2

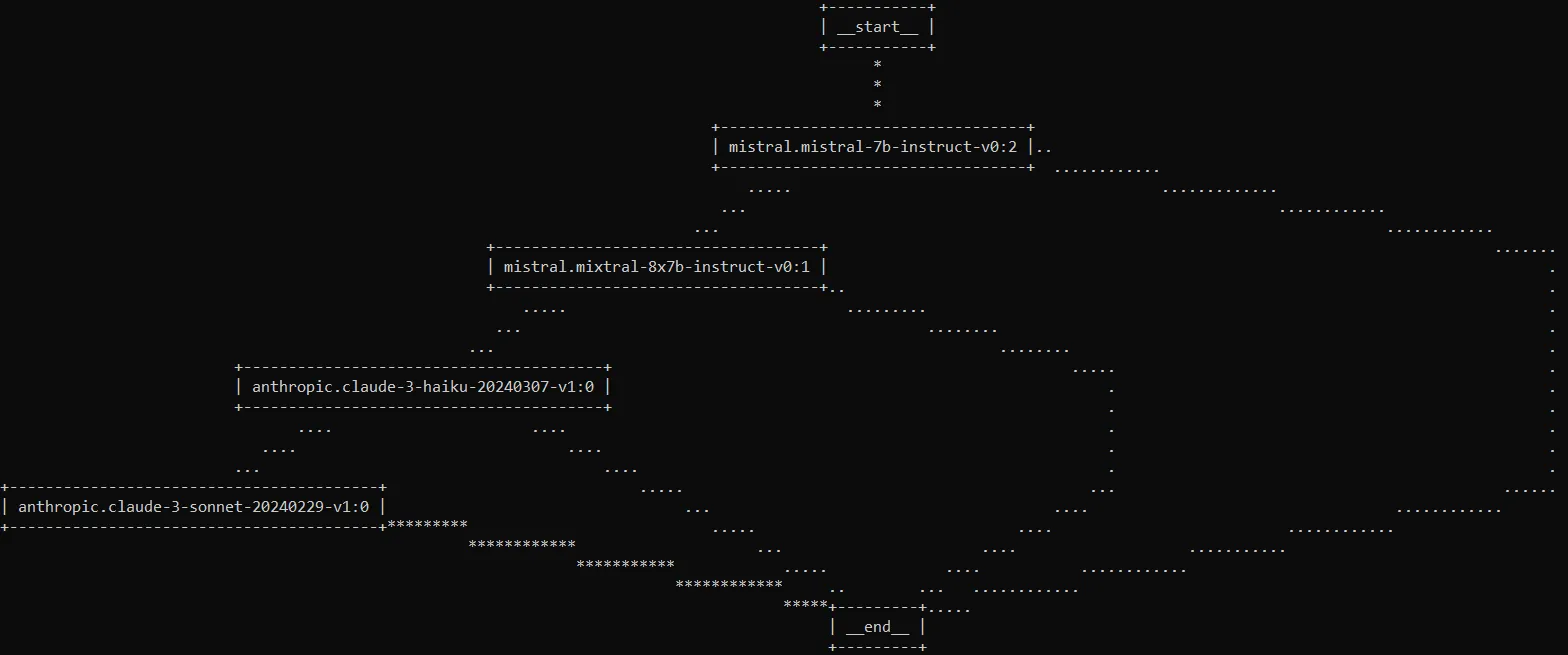

3

4

llmc_lg = llm_cascade_lg(models, thresholds)

# >>> ASCII

llmc_lg.get_graph().print_ascii()

1

2

3

4

5

6

7

8

9

from IPython.display import display, HTML

import base64

def display_image(image_bytes: bytes, width=300):

decoded_img_bytes = base64.b64encode(image_bytes).decode('utf-8')

html = f'<img src="data:image/png;base64,{decoded_img_bytes}" style="width: {width}px;" />'

display(HTML(html))

display_image(llmc_lg.get_graph().draw_png())

1

2

3

4

export LANGCHAIN_TRACING_V2=true

export LANGCHAIN_ENDPOINT=https://api.smith.langchain.com

export LANGCHAIN_API_KEY=your-api-key

export LANGCHAIN_PROJECT=frugal-bedrock

"I think frugality drives innovation, just like other constraints do. One of the only ways to get out of a tight box is to invent your way out." ―Jeff Bezos

- (Chen, Zaharia & Zou, 2023) FrugalGPT: How to Use Large Language Models While Reducing Cost and Improving Performance

- (Honovich et al., 2022) TRUE: Re-evaluating Factual Consistency Evaluation

- stanford-futuredata/FrugalGPT - offers a collection of techniques for building LLM applications with budget constraints

- MurongYue/LLM_MoT_cascade - provides prompts, LLM results and code implementation for Yue et al. (2023)

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.