Use Anthropic Claude 3 models to build generative AI applications with Go

Take Claude Sonnet and Claude Haiku for a spin!

- Claude 3 Haiku is a compact and fast model that provides near-instant responsiveness

- Claude 3 Sonnet provides a balance between skills and speed

- Claude 3 Opus is for highly complex tasks that need high intelligence and performance
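The code snippets in this post pass a `modelID` to Amazon Bedrock to pick one of these models. This is a minimal sketch, assuming the Bedrock model IDs listed below; check the Bedrock documentation for the current IDs and for regional availability. `claude3ModelIDs` and `modelIDFor` are hypothetical helpers and are not part of the repository.

```go
package main

import "fmt"

// claude3ModelIDs maps short names to Bedrock model IDs.
// Assumption: these IDs are current; verify them in the Bedrock console/docs.
var claude3ModelIDs = map[string]string{
	"haiku":  "anthropic.claude-3-haiku-20240307-v1:0",
	"sonnet": "anthropic.claude-3-sonnet-20240229-v1:0",
	"opus":   "anthropic.claude-3-opus-20240229-v1:0",
}

// modelIDFor returns the Bedrock model ID for a Claude 3 variant,
// or an error if the name is not in the map.
func modelIDFor(name string) (string, error) {
	id, ok := claude3ModelIDs[name]
	if !ok {
		return "", fmt.Errorf("unknown Claude 3 model %q", name)
	}
	return id, nil
}

func main() {
	modelID, err := modelIDFor("sonnet")
	if err != nil {
		panic(err)
	}
	fmt.Println(modelID)
}
```

Keeping the IDs in one place makes it easy to switch between Haiku (speed) and Sonnet or Opus (capability) without touching the request code.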

To get started, clone the GitHub repository and run the basic example:

```bash
git clone https://github.com/abhirockzz/claude3-bedrock-go
cd claude3-bedrock-go

go run basic/main.go
```

You should see output similar to this:

```text
request payload:
{"anthropic_version":"bedrock-2023-05-31","max_tokens":1024,"messages":[{"role":"user","content":[{"type":"text","text":"Hello, what's your name?"}]}]}

response payload:
{"id":"msg_015e3SJ99WF6p1yhGTkc4HbK","type":"message","role":"assistant","content":[{"type":"text","text":"My name is Claude. It's nice to meet you!"}],"model":"claude-3-sonnet-28k-20240229","stop_reason":"end_turn","stop_sequence":null,"usage":{"input_tokens":14,"output_tokens":15}}

response string:
My name is Claude. It's nice to meet you!
```

The request payload is created using a struct (`Claude3Request`):

```go
msg := "Hello, what's your name?"

// a single user message with one text content block
payload := Claude3Request{
	AnthropicVersion: "bedrock-2023-05-31",
	MaxTokens:        1024,
	Messages: []Message{
		{
			Role: "user",
			Content: []Content{
				{
					Type: "text",
					Text: msg,
				},
			},
		},
	},
}

payloadBytes, err := json.Marshal(payload)
if err != nil {
	log.Fatal(err)
}
```

The JSON payload is then sent to the model using `InvokeModel`, the response payload is unmarshalled into another struct (`Claude3Response`), and the text response is extracted from it:

```go
//...

output, err := brc.InvokeModel(context.Background(), &bedrockruntime.InvokeModelInput{
	Body:        payloadBytes,
	ModelId:     aws.String(modelID),
	ContentType: aws.String("application/json"),
})
if err != nil {
	log.Fatal(err)
}

var resp Claude3Response
err = json.Unmarshal(output.Body, &resp)
if err != nil {
	log.Fatal(err)
}

log.Println("response string:\n", resp.ResponseContent[0].Text)
```

Next, try the chat example, which streams the response from the model:

```bash
go run chat-streaming/main.go
```

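This example uses `InvokeModelWithResponseStream` (instead of `InvokeModel`), so the response is returned in parts as it is generated:

```go
output, err := brc.InvokeModelWithResponseStream(context.Background(), &bedrockruntime.InvokeModelWithResponseStreamInput{
	Body:        payloadBytes,
	ModelId:     aws.String(modelID),
	ContentType: aws.String("application/json"),
})
if err != nil {
	log.Fatal(err)
}

// print each part of the response as soon as it arrives
resp, err := processStreamingOutput(output, func(ctx context.Context, part []byte) error {
	fmt.Print(string(part))
	return nil
})
if err != nil {
	log.Fatal(err)
}
```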


The partial responses are handled by the `processStreamingOutput` function; you can check the full code in the GitHub repository. The important thing to understand is how the partial responses are collected together to produce the final output:

```go
func processStreamingOutput(output *bedrockruntime.InvokeModelWithResponseStreamOutput, handler StreamingOutputHandler) (Claude3Response, error) {

	//... (declarations of resp and combinedResult elided)

	stream := output.GetStream()
	defer stream.Close()

	for event := range stream.Events() {
		switch v := event.(type) {
		case *types.ResponseStreamMemberChunk:
			var pr PartialResponse
			err := json.NewDecoder(bytes.NewReader(v.Value.Bytes)).Decode(&pr)
			if err != nil {
				return resp, err
			}

			switch pr.Type {
			case partialResponseTypeContentBlockDelta:
				// pass each text fragment to the caller, then accumulate it
				if err := handler(context.Background(), []byte(pr.Delta.Text)); err != nil {
					return resp, err
				}
				combinedResult += pr.Delta.Text
			case partialResponseTypeMessageStart:
				resp.ID = pr.Message.ID
				resp.Usage.InputTokens = pr.Message.Usage.InputTokens
			case partialResponseTypeMessageDelta:
				resp.StopReason = pr.Delta.StopReason
				// message_delta events carry usage at the top level, not under "message"
				// (assumes PartialResponse exposes it as a Usage field)
				resp.Usage.OutputTokens = pr.Usage.OutputTokens
			}

		//...
		}
	}

	// surface any error that ended the event stream early
	if err := stream.Err(); err != nil {
		return resp, err
	}

	// assumes resp.ResponseContent was initialized with one element in the elided section
	resp.ResponseContent[0].Text = combinedResult
	return resp, nil
}
```
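The same collection logic can be tried without AWS credentials. This is a minimal sketch, assuming the Anthropic Messages streaming event shapes (`message_start`, `content_block_delta`, `message_delta`); the sample chunks are illustrative, not captured from a real call, and `streamEvent`, `result` and `combineEvents` are hypothetical names.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// streamEvent holds only the fields of the streaming events used below.
type streamEvent struct {
	Type    string `json:"type"`
	Message struct {
		ID    string `json:"id"`
		Usage struct {
			InputTokens int `json:"input_tokens"`
		} `json:"usage"`
	} `json:"message"`
	Delta struct {
		Text       string `json:"text"`
		StopReason string `json:"stop_reason"`
	} `json:"delta"`
	Usage struct {
		OutputTokens int `json:"output_tokens"`
	} `json:"usage"`
}

// result is a hypothetical summary of one streamed response.
type result struct {
	ID           string
	Text         string
	StopReason   string
	InputTokens  int
	OutputTokens int
}

// combineEvents decodes each raw chunk, forwards text deltas to onText as they
// arrive, and accumulates everything into a single result.
func combineEvents(chunks [][]byte, onText func(string)) (result, error) {
	var res result
	var sb strings.Builder
	for _, chunk := range chunks {
		var ev streamEvent
		if err := json.Unmarshal(chunk, &ev); err != nil {
			return res, err
		}
		switch ev.Type {
		case "message_start":
			res.ID = ev.Message.ID
			res.InputTokens = ev.Message.Usage.InputTokens
		case "content_block_delta":
			onText(ev.Delta.Text)
			sb.WriteString(ev.Delta.Text)
		case "message_delta":
			res.StopReason = ev.Delta.StopReason
			res.OutputTokens = ev.Usage.OutputTokens
		}
	}
	res.Text = sb.String()
	return res, nil
}

func main() {
	chunks := [][]byte{
		[]byte(`{"type":"message_start","message":{"id":"msg_1","usage":{"input_tokens":14,"output_tokens":1}}}`),
		[]byte(`{"type":"content_block_delta","index":0,"delta":{"type":"text_delta","text":"My name "}}`),
		[]byte(`{"type":"content_block_delta","index":0,"delta":{"type":"text_delta","text":"is Claude."}}`),
		[]byte(`{"type":"message_delta","delta":{"stop_reason":"end_turn","stop_sequence":null},"usage":{"output_tokens":15}}`),
	}
	res, err := combineEvents(chunks, func(s string) { fmt.Print(s) })
	if err != nil {
		panic(err)
	}
	fmt.Printf("\n%+v\n", res)
}
```

Using a `strings.Builder` instead of repeated string concatenation avoids re-copying the accumulated text on every delta, which matters for long responses.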


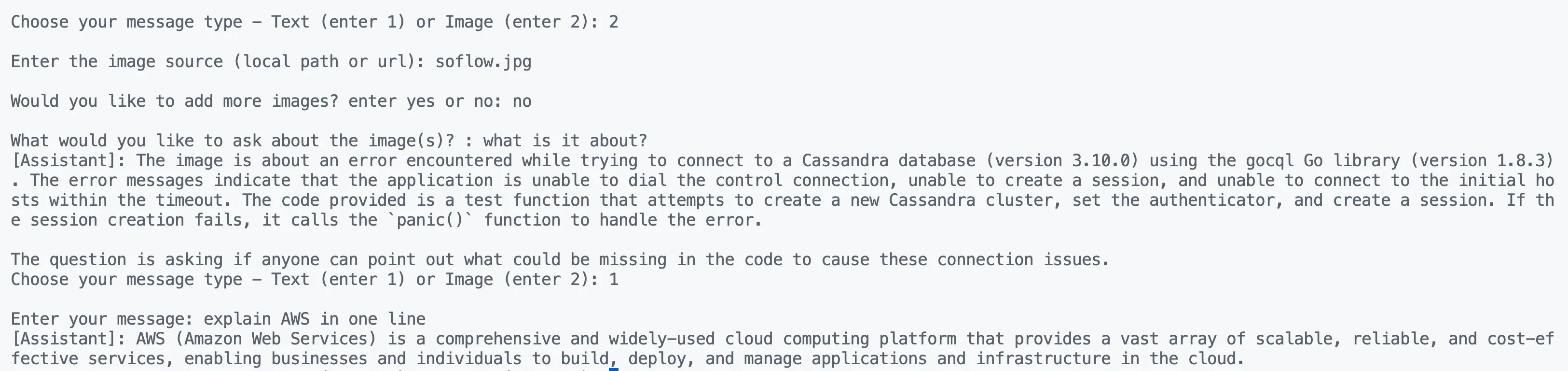

```bash
go run images/main.go
```

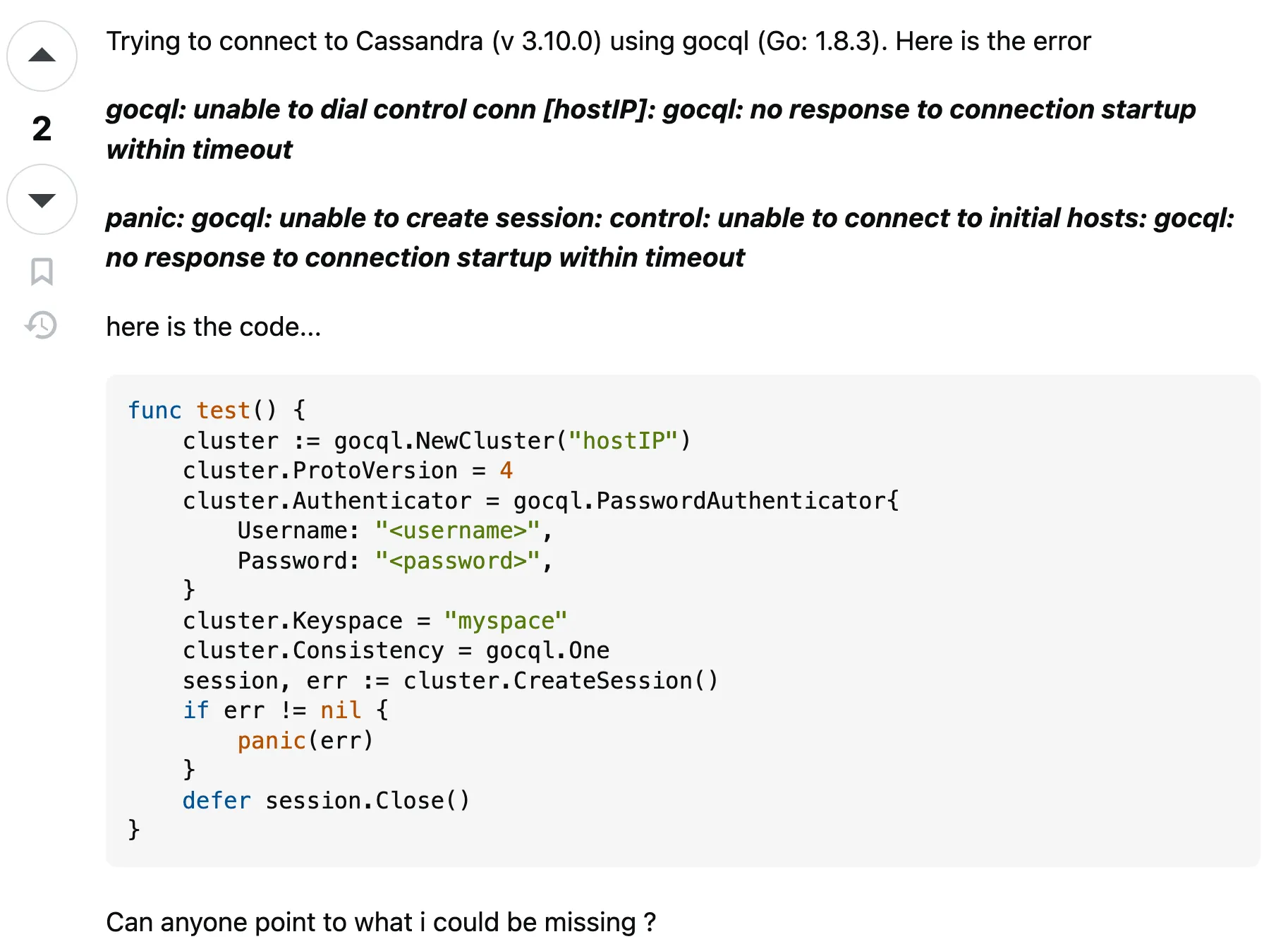

```go
func test() {
	cluster := gocql.NewCluster("hostIP")
	cluster.ProtoVersion = 4
	cluster.Authenticator = gocql.PasswordAuthenticator{
		Username: "<username>",
		Password: "<password>",
	}
	cluster.Keyspace = "myspace"
	cluster.Consistency = gocql.One
	session, err := cluster.CreateSession()
	if err != nil {
		panic(err)
	}
	defer session.Close()
}
```

```text
Based on the error messages shown in the image, it seems that the issue is related to connecting to the Cassandra database using the gocql driver (Go: 1.8.3). The errors indicate that the application is unable to dial the control connection, create a session, or connect to the initial hosts within the timeout period.

A few things you could check to troubleshoot this issue:

1. Verify the connection details (host, port, username, password) are correct and match the Cassandra cluster configuration.

2. Ensure the Cassandra cluster is up and running and accessible from the application host.

3. Check the firewall settings to make sure the application host is able to connect to the Cassandra cluster on the required ports.

4. Inspect the Cassandra server logs for any errors or clues about the connection issue.

5. Try increasing the timeout values in the gocql configuration, as the current timeouts may be too short for the Cassandra cluster to respond.

6. Ensure the gocql version (1.8.3) is compatible with the Cassandra version (3.10.0) you are using.

7. Consider adding some error handling and retry logic in your code to gracefully handle connection failures and attempt to reconnect.

Without more context about your specific setup and environment, it's difficult to pinpoint the exact issue. However, the steps above should help you investigate the problem and find a solution.
```

For images, the request payload contains an `image` content block (with the base64-encoded image data) in addition to the text prompt:

```go
payload := Claude3Request{
	AnthropicVersion: "bedrock-2023-05-31",
	MaxTokens:        1024,
	Messages: []Message{
		{
			Role: "user",
			Content: []Content{
				{
					// the image, sent inline as base64-encoded data
					Type: "image",
					Source: &Source{
						Type:      "base64",
						MediaType: "image/jpeg",
						Data:      imageContents,
					},
				},
				{
					// the text prompt that accompanies the image
					Type: "text",
					Text: msg,
				},
			},
		},
	},
}
```

`imageContents` in the `Data` attribute is the base64-encoded version of the image, which is calculated like this:

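```go
import (
	"encoding/base64"
	"os"
)

// readImageAsBase64 reads the file at filePath and returns its contents
// encoded with standard base64.
func readImageAsBase64(filePath string) (string, error) {
	imageFile, err := os.ReadFile(filePath)
	if err != nil {
		return "", err
	}

	encodedString := base64.StdEncoding.EncodeToString(imageFile)
	return encodedString, nil
}
```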

Finally, try multi-modal chat with streaming:

```bash
go run multi-modal-chat-streaming/main.go
```

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.