Use Anthropic Claude 3 models to build generative AI applications with Go

Take Claude Sonnet and Claude Haiku for a spin!

Abhishek Gupta

Amazon Employee

Published May 13, 2024

Last Modified May 28, 2024

Anthropic's Claude 3 is a family of AI models with different capabilities and cost for a variety of tasks:

- Claude 3 Haiku is a compact and fast model that provides near-instant responsiveness

- Claude 3 Sonnet provides a balance between skills and speed

- Claude 3 Opus is for highly complex tasks that need high intelligence and performance

Claude 3 models are multi-modal. This means that they can accept both text and images as inputs (although they can only output text). Let's learn how to use the Claude 3 models on Amazon Bedrock with Go.

Refer to the "Before You Begin" section in this blog post to complete the prerequisites for running the examples. This includes installing Go, configuring Amazon Bedrock access, and providing the necessary IAM permissions.

Amazon Bedrock abstracts multiple models via a uniform set of APIs that exchange JSON payloads. The same applies to Claude 3.

Let's start off with a simple example using AWS SDK for Go (v2).

You can run it as follows:

The response may (or may not) be slightly different in your case:

You can refer to the complete code here.

I will break it down to make this simpler for you:

We start by creating the JSON payload - it's modelled as a Go

struct (Claude3Request):InvokeModel is used to call the model. The JSON response is converted to a struct (

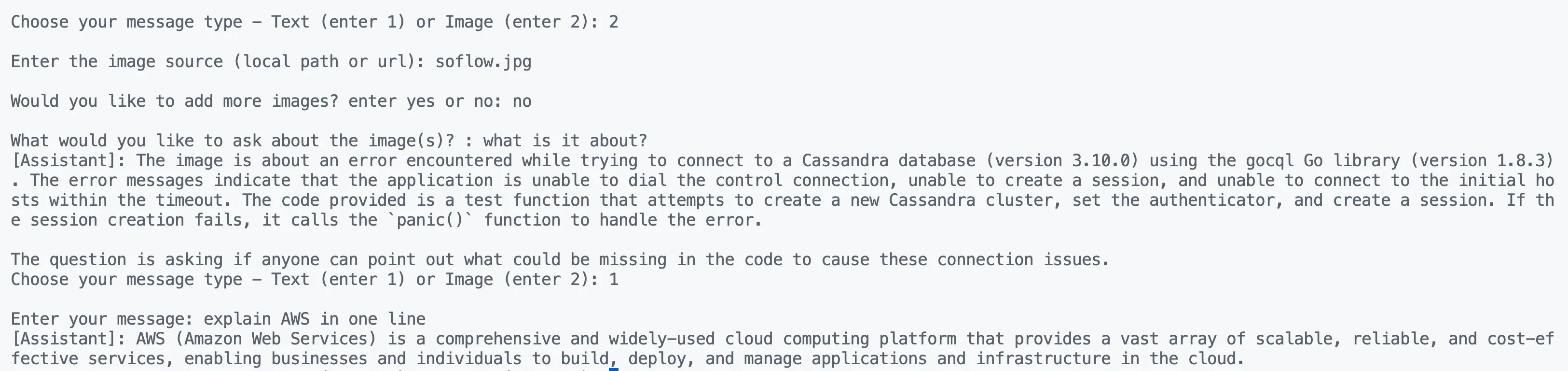

Claude3Response) and the text response is extracted from it.Now moving on to a common example which involves a conversational exchange. We will also add a streaming element to for better experience - the client application does not need to wait for the complete response to be generated for it start showing up in the conversation.

You can run it as follows:

You can refer to the complete code here.

A streaming-based implementation is a bit more involved. First off, we use InvokeModelWithResponseStream (instead of InvokeModel). To process its output, we use:
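The heart of the streaming version is turning a series of chunks into one answer. With the AWS SDK for Go v2, each chunk event from the response stream exposes raw bytes, and for Claude 3 those bytes are JSON events such as message_start, content_block_delta, and message_stop. This sketch assumes the chunk bytes have already been read from the stream (the SDK loop appears only as a comment) and uses a hypothetical collectStream function in place of processStreamingOutput: it hands each text_delta fragment to a callback as soon as it arrives and concatenates the fragments into the final output.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// streamEvent keeps only the fields needed to assemble text; other event
// types decode into it harmlessly and are skipped.
type streamEvent struct {
	Type  string `json:"type"`
	Delta struct {
		Type string `json:"type"`
		Text string `json:"text"`
	} `json:"delta"`
}

// collectStream decodes each chunk, passes every text fragment to onPartial
// (for example, to print it immediately) and returns the combined text.
func collectStream(chunks [][]byte, onPartial func(string)) (string, error) {
	var sb strings.Builder
	for _, chunk := range chunks {
		var ev streamEvent
		if err := json.Unmarshal(chunk, &ev); err != nil {
			return "", fmt.Errorf("decoding chunk: %w", err)
		}
		if ev.Type == "content_block_delta" && ev.Delta.Type == "text_delta" {
			onPartial(ev.Delta.Text)
			sb.WriteString(ev.Delta.Text)
		}
	}
	return sb.String(), nil
}

func main() {
	// In the real program the bytes come from the stream, roughly:
	//   for event := range output.GetStream().Events() {
	//       if c, ok := event.(*types.ResponseStreamMemberChunk); ok {
	//           // c.Value.Bytes holds one JSON event
	//       }
	//   }
	chunks := [][]byte{
		[]byte(`{"type":"message_start","message":{"role":"assistant"}}`),
		[]byte(`{"type":"content_block_delta","index":0,"delta":{"type":"text_delta","text":"Hello"}}`),
		[]byte(`{"type":"content_block_delta","index":0,"delta":{"type":"text_delta","text":", world"}}`),
		[]byte(`{"type":"message_stop"}`),
	}
	final, err := collectStream(chunks, func(s string) { fmt.Print(s) })
	if err != nil {
		panic(err)
	}
	fmt.Println()
	fmt.Println("final:", final)
}
```

A real implementation would process events as they arrive from the channel rather than from a slice, but the accumulation logic stays the same.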

Here are a few bits from the processStreamingOutput function (you can check the code here). The important thing to understand is how the partial responses are collected together to produce the final output.

All the Claude 3 models can accept images as inputs. Haiku is deemed to be good at OCR (optical character recognition), understanding images, and so on. Let's make use of Claude Haiku in the upcoming examples; no major changes are required, except for the model ID.

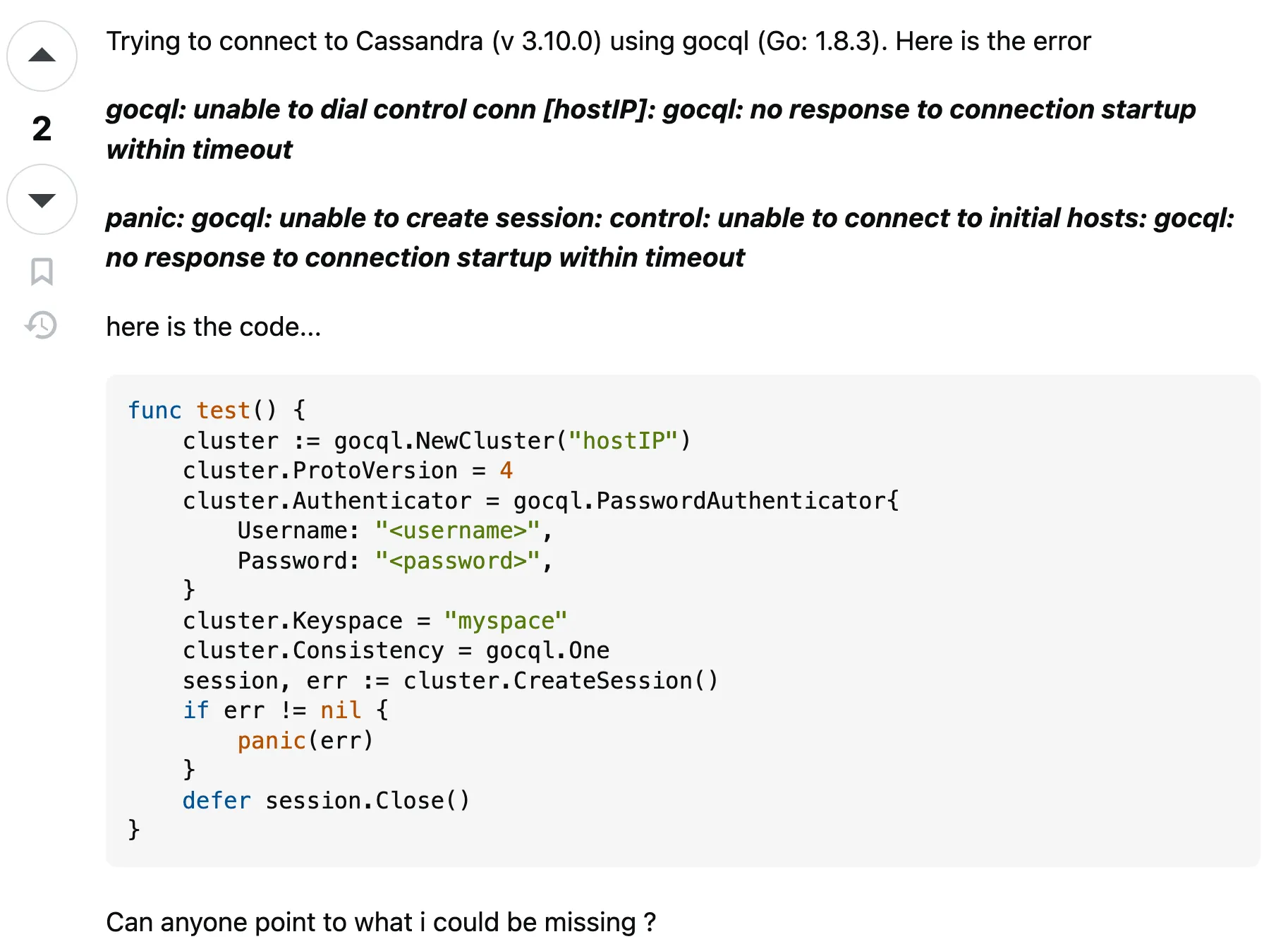

The example uses this image (an actual StackOverflow question) along with the prompt "Transcribe the code in the question. Only output the code."

You can run it as follows:

This is the response that Claude Haiku came up with. It was able to extract the entire code block from the image. Impressive!

I tried another one: "Can you suggest a solution to the question?"

And here is the response:

You can refer to the complete code here.

Here is what the JSON payload for the request looks like:

The imageContents in the Data attribute is the base64-encoded version of the image, which is calculated like this:

You can try a different image (for example, this one) and check whether it's able to calculate the cost of all the menu items.
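Computing the base64 value for the image needs only the standard library. This sketch is an assumption rather than the post's implementation: it reads an image file, sniffs its media type with http.DetectContentType, rejects anything other than the JPEG, PNG, GIF, and WebP formats that Claude 3 accepts, and encodes the bytes with base64.StdEncoding. The encodeImage name is hypothetical.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"net/http"
	"os"
)

// Image media types accepted by Claude 3.
var supported = map[string]bool{
	"image/jpeg": true,
	"image/png":  true,
	"image/gif":  true,
	"image/webp": true,
}

// encodeImage returns the base64 string for the Data attribute and the
// media type detected from the file's leading bytes.
func encodeImage(raw []byte) (string, string, error) {
	mediaType := http.DetectContentType(raw)
	if !supported[mediaType] {
		return "", "", fmt.Errorf("unsupported media type %q", mediaType)
	}
	return base64.StdEncoding.EncodeToString(raw), mediaType, nil
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: go run . <image-file>")
		return
	}
	raw, err := os.ReadFile(os.Args[1])
	if err != nil {
		panic(err)
	}
	imageContents, mediaType, err := encodeImage(raw)
	if err != nil {
		panic(err)
	}
	fmt.Println(mediaType, "base64 length:", len(imageContents))
}
```

Sniffing the type from the bytes avoids trusting a file extension, which matters because the media_type sent in the request should match the actual image format.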

Image and text data are not mutually exclusive. The message structure (JSON payload) is flexible enough to support both.

You can refer to the complete code here.

Here is an example in which you can mix and match text-based and image-based messages. You can run it as follows:

Here is the response (I used the same StackOverflow image as above):

You don't have to depend on Python to build generative AI applications. Thanks to AWS SDK support, you can use the programming language of your choice (including Go!) to integrate with Amazon Bedrock.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.