Scrape All Things: AI-powered web scraping with ScrapeGraphAI 🕷️ and Amazon Bedrock ⛰️

Learn how to extract information from documents and websites using natural language prompts.

"In the beginning was a graph..." ― Greg Egan, Schild's Ladder


<catalog>

<book id="bk101">

<author>Gambardella, Matthew</author>

<title>XML Developer's Guide</title>

<genre>Computer</genre>

<price>44.95</price>

<publish_date>2000-10-01</publish_date>

<description>An in-depth look at creating applications

with XML.</description>

</book>

<book id="bk102">

<author>Ralls, Kim</author>

<title>Midnight Rain</title>

<genre>Fantasy</genre>

<price>5.95</price>

<publish_date>2000-12-16</publish_date>

<description>A former architect battles corporate zombies,

an evil sorceress, and her own childhood to become queen

of the world.</description>

</book>

<book id="bk103">

<author>Corets, Eva</author>

<title>Maeve Ascendant</title>

<genre>Fantasy</genre>

<price>5.95</price>

<publish_date>2000-11-17</publish_date>

<description>After the collapse of a nanotechnology

society in England, the young survivors lay the

foundation for a new society.</description>

</book>

<book id="bk104">

<author>Corets, Eva</author>

<title>Oberon's Legacy</title>

<genre>Fantasy</genre>

<price>5.95</price>

<publish_date>2001-03-10</publish_date>

<description>In post-apocalypse England, the mysterious

agent known only as Oberon helps to create a new life

for the inhabitants of London. Sequel to Maeve

Ascendant.</description>

</book>

<book id="bk105">

<author>Corets, Eva</author>

<title>The Sundered Grail</title>

<genre>Fantasy</genre>

<price>5.95</price>

<publish_date>2001-09-10</publish_date>

<description>The two daughters of Maeve, half-sisters,

battle one another for control of England. Sequel to

Oberon's Legacy.</description>

</book>

<book id="bk106">

<author>Randall, Cynthia</author>

<title>Lover Birds</title>

<genre>Romance</genre>

<price>4.95</price>

<publish_date>2000-09-02</publish_date>

<description>When Carla meets Paul at an ornithology

conference, tempers fly as feathers get ruffled.</description>

</book>

<book id="bk107">

<author>Thurman, Paula</author>

<title>Splish Splash</title>

<genre>Romance</genre>

<price>4.95</price>

<publish_date>2000-11-02</publish_date>

<description>A deep sea diver finds true love twenty

thousand leagues beneath the sea.</description>

</book>

<book id="bk108">

<author>Knorr, Stefan</author>

<title>Creepy Crawlies</title>

<genre>Horror</genre>

<price>4.95</price>

<publish_date>2000-12-06</publish_date>

<description>An anthology of horror stories about roaches,

centipedes, scorpions and other insects.</description>

</book>

<book id="bk109">

<author>Kress, Peter</author>

<title>Paradox Lost</title>

<genre>Science Fiction</genre>

<price>6.95</price>

<publish_date>2000-11-02</publish_date>

<description>After an inadvertant trip through a Heisenberg

Uncertainty Device, James Salway discovers the problems

of being quantum.</description>

</book>

<book id="bk110">

<author>O'Brien, Tim</author>

<title>Microsoft .NET: The Programming Bible</title>

<genre>Computer</genre>

<price>36.95</price>

<publish_date>2000-12-09</publish_date>

<description>Microsoft's .NET initiative is explored in

detail in this deep programmer's reference.</description>

</book>

<book id="bk111">

<author>O'Brien, Tim</author>

<title>MSXML3: A Comprehensive Guide</title>

<genre>Computer</genre>

<price>36.95</price>

<publish_date>2000-12-01</publish_date>

<description>The Microsoft MSXML3 parser is covered in

detail, with attention to XML DOM interfaces, XSLT processing,

SAX and more.</description>

</book>

<book id="bk112">

<author>Galos, Mike</author>

<title>Visual Studio 7: A Comprehensive Guide</title>

<genre>Computer</genre>

<price>49.95</price>

<publish_date>2001-04-16</publish_date>

<description>Microsoft Visual Studio 7 is explored in depth,

looking at how Visual Basic, Visual C++, C#, and ASP+ are

integrated into a comprehensive development

environment.</description>

</book>

</catalog>


"""

Old XML scraper 👴🏻
"""

import json

from lxml import etree

# Get catalog (root element)
catalog = etree.parse('books.xml').getroot()

# Find all books
books = catalog.findall('book')

# Get author, title and genre for each book
result = {'books': []}
for book in books:
    author, title, genre = book.find('author'), \
                           book.find('title'), \
                           book.find('genre')
    result['books'].append({
        'author': author.text,
        'title': title.text,
        'genre': genre.text
    })

# Print the final result
print(json.dumps(result, indent=4))


{

"books": [

{

"author": "Gambardella, Matthew",

"title": "XML Developer's Guide",

"genre": "Computer"

},

{

"author": "Ralls, Kim",

"title": "Midnight Rain",

"genre": "Fantasy"

},

{

"author": "Corets, Eva",

"title": "Maeve Ascendant",

"genre": "Fantasy"

},

{

"author": "Corets, Eva",

"title": "Oberon's Legacy",

"genre": "Fantasy"

},

{

"author": "Corets, Eva",

"title": "The Sundered Grail",

"genre": "Fantasy"

},

{

"author": "Randall, Cynthia",

"title": "Lover Birds",

"genre": "Romance"

},

{

"author": "Thurman, Paula",

"title": "Splish Splash",

"genre": "Romance"

},

{

"author": "Knorr, Stefan",

"title": "Creepy Crawlies",

"genre": "Horror"

},

{

"author": "Kress, Peter",

"title": "Paradox Lost",

"genre": "Science Fiction"

},

{

"author": "O'Brien, Tim",

"title": "Microsoft .NET: The Programming Bible",

"genre": "Computer"

},

{

"author": "O'Brien, Tim",

"title": "MSXML3: A Comprehensive Guide",

"genre": "Computer"

},

{

"author": "Galos, Mike",

"title": "Visual Studio 7: A Comprehensive Guide",

"genre": "Computer"

}

]

}

The script above only works because we already know the structure of the file: every book is a book element 📗, which contains child elements corresponding to the author, title and genre of the book, and all books are themselves child nodes of the root node, which is called catalog 🗂️.

Not-So-Fun Fact: in a past life, when I worked as a software tester, I used to write scripts like this all the time using frameworks like BeautifulSoup (BS will make an appearance later in this article, just keep reading) and Selenium. Glad those days are over! 🤭
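To make that structural assumption concrete, here is a minimal sketch that uses the standard library's `xml.etree.ElementTree` in place of lxml. The inline catalog, the second book (`bk999`) and the `extract_books` helper are made up for illustration; the point is only that a hand-written scraper has to decide what happens when an expected child element is missing.

```python
import xml.etree.ElementTree as ET

# Tiny inline catalog (made up for illustration); the second book has no <genre>
SAMPLE = """<catalog>
  <book id="bk101">
    <author>Gambardella, Matthew</author>
    <title>XML Developer's Guide</title>
    <genre>Computer</genre>
  </book>
  <book id="bk999">
    <author>Doe, Jane</author>
    <title>Untitled</title>
  </book>
</catalog>"""


def extract_books(xml_text):
    """Return author, title and genre for every <book> directly under the root."""
    catalog = ET.fromstring(xml_text)
    books = []
    for book in catalog.findall("book"):
        books.append({
            # findtext returns the default instead of raising when a child is missing
            "author": book.findtext("author", default=None),
            "title": book.findtext("title", default=None),
            "genre": book.findtext("genre", default=None),
        })
    return {"books": books}


result = extract_books(SAMPLE)
print(result["books"][1]["genre"])  # None
```

With `book.find('genre').text`, as in the old scraper, the second book would raise an `AttributeError` because `find` returns `None` for a missing element.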


"""

New XML scraper 🕷️⛰️
"""

import json

from scrapegraphai.graphs import XMLScraperGraph

# Read XML file
with open("books.xml", 'r', encoding="utf-8") as file:
    source = file.read()

# Define the graph configuration
graph_config = {
    "llm": {"model": "bedrock/anthropic.claude-3-sonnet-20240229-v1:0"},
    "embeddings": {"model": "bedrock/cohere.embed-multilingual-v3"}
}

# Create a graph instance and run it
graph = XMLScraperGraph(
    prompt="List me all the authors, title and genres of the books. Skip the preamble.",
    source=source,
    config=graph_config
)
result = graph.run()

# Print the final result
print(json.dumps(result, indent=4))

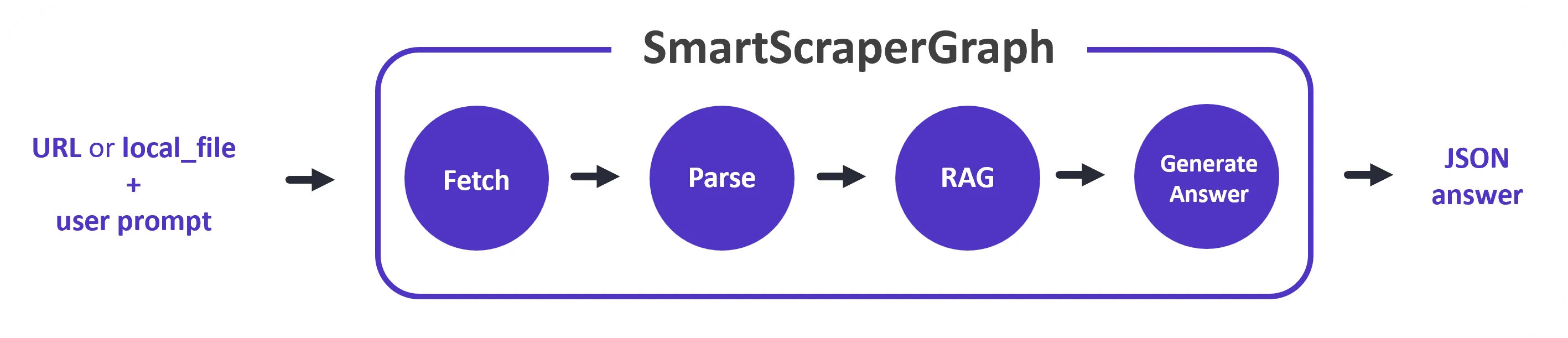

Skip the preamble instruction to ensure that Claude goes straight to the point and generates the right output.Input → 🟢 → 🟡 → 🟣 → Output.XMLScraperGraph, which we used in the example above, or the SmartScraperGraph (pictured below), and the possibility to create your own custom graphs.
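The Input → 🟢 → 🟡 → 🟣 → Output idea can be sketched in plain Python. This is only a toy illustration: the `fetch`, `parse` and `answer` functions and the `run_pipeline` helper are hypothetical stand-ins, not ScrapeGraphAI's actual nodes or API, and no LLM is involved.

```python
from functools import reduce


def fetch(state):
    """🟢 Pretend to load the source document (hard-coded here)."""
    return {**state, "document": "<book><title>Midnight Rain</title></book>"}


def parse(state):
    """🟡 Extract the raw title text from the document."""
    doc = state["document"]
    start = doc.index("<title>") + len("<title>")
    end = doc.index("</title>")
    return {**state, "title": doc[start:end]}


def answer(state):
    """🟣 Shape the final answer."""
    return {**state, "answer": {"titles": [state["title"]]}}


def run_pipeline(nodes, state):
    """Pass the state through each node in order: Input → nodes → Output."""
    return reduce(lambda acc, node: node(acc), nodes, state)


output = run_pipeline([fetch, parse, answer], {"prompt": "List all titles."})
print(output["answer"])  # {'titles': ['Midnight Rain']}
```

Each node takes the shared state and returns an extended copy, so swapping or adding a step only means changing the list passed to `run_pipeline`.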

By default, the AWS credentials are taken from the environment (AWS_*), but we can create a custom client and pass it along to the graph:


"""

SmartScraperGraph with custom AWS client ☁️
"""

import os
import json

import boto3

from scrapegraphai.graphs import SmartScraperGraph

# Initialize session
session = boto3.Session(
    aws_access_key_id=os.environ.get("AWS_ACCESS_KEY_ID"),
    aws_secret_access_key=os.environ.get("AWS_SECRET_ACCESS_KEY"),
    aws_session_token=os.environ.get("AWS_SESSION_TOKEN"),
    region_name=os.environ.get("AWS_DEFAULT_REGION")
)

# Initialize client
client = session.client("bedrock-runtime")

# Define graph configuration
config = {
    "llm": {
        "client": client,
        "model": "bedrock/anthropic.claude-3-sonnet-20240229-v1:0",
        "temperature": 0.0
    },
    "embeddings": {
        "client": client,
        "model": "bedrock/cohere.embed-multilingual-v3",
    },
}

# Create graph instance and run it
graph = SmartScraperGraph(
    prompt="List me all the articles.",
    source="https://perinim.github.io/projects",
    config=config
)
result = graph.run()

# Print the final result
print(json.dumps(result, indent=4))


{
    "articles": [
        "Rotary Pendulum RL",
        "DQN Implementation from scratch",
        "Multi Agents HAED",
        "Wireless ESC for Modular Drones"
    ]
}

👨‍💻 All code and documentation is available on GitHub.


git clone https://github.com/JGalego/ScrapeGraphAI-Bedrock

cd ScrapeGraphAI-Bedrock


# Install Python packages

pip install -r requirements.txt

# Install browsers

# https://playwright.dev/python/docs/browsers#install-browsers

playwright install

# Install system dependencies

# https://playwright.dev/python/docs/browsers#install-system-dependencies

playwright install-deps


# Option 1: (recommended) AWS CLI

aws configure

# Option 2: environment variables

export AWS_ACCESS_KEY_ID=...

export AWS_SECRET_ACCESS_KEY=...

export AWS_SESSION_TOKEN=...

export AWS_DEFAULT_REGION=...
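If you go with Option 2, a quick check like the following sketch can confirm that the variables are actually set before running the demos. The `missing_aws_vars` helper is hypothetical, and treating `AWS_SESSION_TOKEN` as optional is an assumption (it is typically only needed for temporary credentials). With Option 1 the check does not apply, since the credentials live in the AWS CLI configuration instead.

```python
import os

# Variables from Option 2; the session token is treated as optional here
# (assumption: it is only needed for temporary credentials)
REQUIRED = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_DEFAULT_REGION")


def missing_aws_vars(env=None):
    """Return the required variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED if not env.get(name)]


# Example with an explicit mapping instead of the real environment
example_env = {"AWS_ACCESS_KEY_ID": "AKIA-EXAMPLE", "AWS_DEFAULT_REGION": "us-east-1"}
print(missing_aws_vars(example_env))  # ['AWS_SECRET_ACCESS_KEY']
```

Calling `missing_aws_vars()` with no argument checks the real environment of the current process.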


# Run the full application

streamlit run pages/scrapegraphai_bedrock.py

# or just a single demo

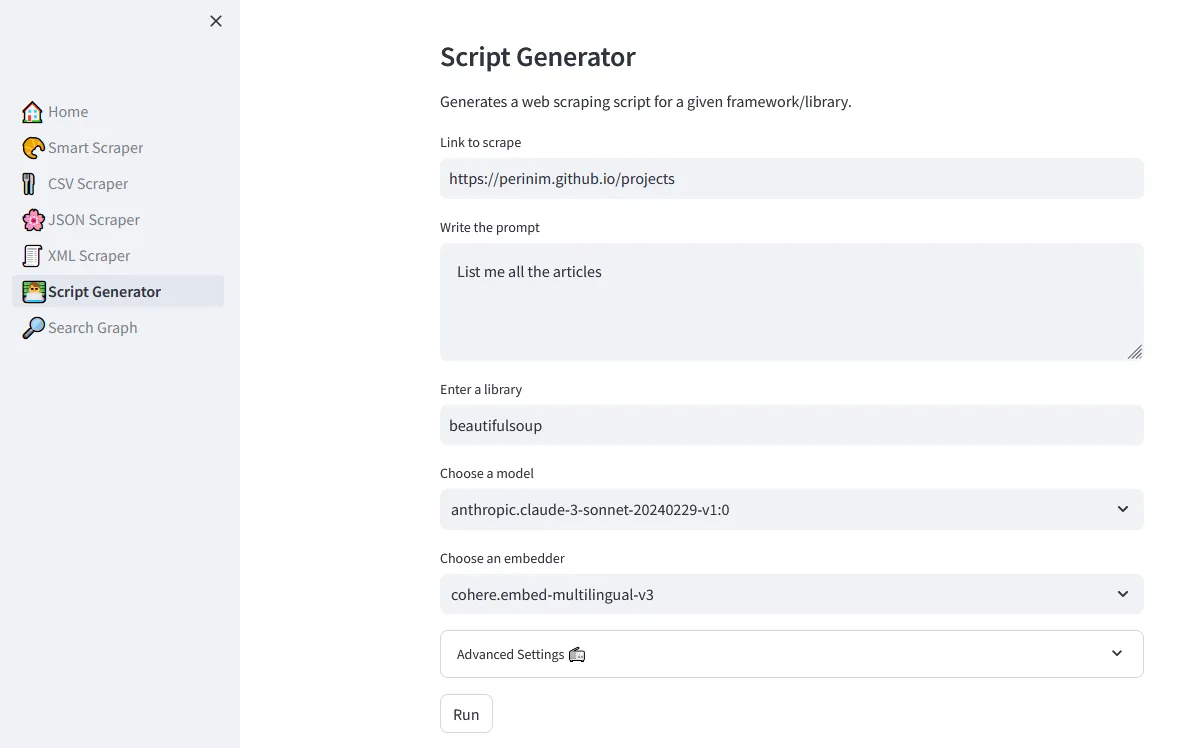

streamlit run pages/smart_scraper.pyScriptCreatorGraph, to generate an old-style scraping pipeline powered by the beautifulsoup framework or any other scraping library.


from bs4 import BeautifulSoup
import requests

url = "https://perinim.github.io/projects"
response = requests.get(url)
soup = BeautifulSoup(response.content, "html.parser")

articles = soup.find_all("article")

for article in articles:
    print(article.get_text())

👷‍♂️ Want to contribute? Feel free to open an issue or a pull request!

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.