Scrape All Things: AI-powered web scraping with ScrapeGraphAI 🕷️ and Amazon Bedrock ⛰️

Learn how to extract information from documents and websites using natural language prompts.

"In the beginning was a graph..." ― Greg Egan, Schild's Ladder

`book` element 📗, which contains child elements corresponding to the author, title, and genre of the book, and that all books are themselves child nodes of the root node, which is called `catalog` 🗂️.

Not-So-Fun Fact: in a past life, when I worked as a software tester, I used to write scripts like this all the time using frameworks like BeautifulSoup (BS will make an appearance later in this article; just keep reading) and Selenium. Glad those days are over! 🤭
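
For anyone who never had the pleasure, here is a minimal sketch of that kind of hand-written script, run against the catalog structure described above (a `catalog` root whose `book` children hold `author`, `title` and `genre` elements). It uses Python's standard-library `xml.etree.ElementTree` instead of BeautifulSoup or Selenium so that it runs without extra packages, and the sample XML is made up for illustration.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Hypothetical sample: a <catalog> root whose <book> children
# each contain <author>, <title> and <genre> elements.
CATALOG_XML = """
<catalog>
  <book>
    <author>Greg Egan</author>
    <title>Schild's Ladder</title>
    <genre>Science Fiction</genre>
  </book>
  <book>
    <author>Ursula K. Le Guin</author>
    <title>The Dispossessed</title>
    <genre>Science Fiction</genre>
  </book>
</catalog>
"""

FIELDS = ("author", "title", "genre")


def extract_books(xml_text: str) -> List[Dict[str, str]]:
    """Return one dict per <book> element, keyed by child element name."""
    root = ET.fromstring(xml_text.strip())  # parses the <catalog> root
    return [
        # findtext returns None when the child is missing, so fall back to "".
        {field: (book.findtext(field) or "").strip() for field in FIELDS}
        for book in root.findall("book")  # direct <book> children only
    ]


if __name__ == "__main__":
    for entry in extract_books(CATALOG_XML):
        print(f"{entry['title']} by {entry['author']} ({entry['genre']})")
```

Every new field or layout change means touching `FIELDS` or the traversal by hand, which is exactly the chore the rest of this article tries to hand off to an LLM.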

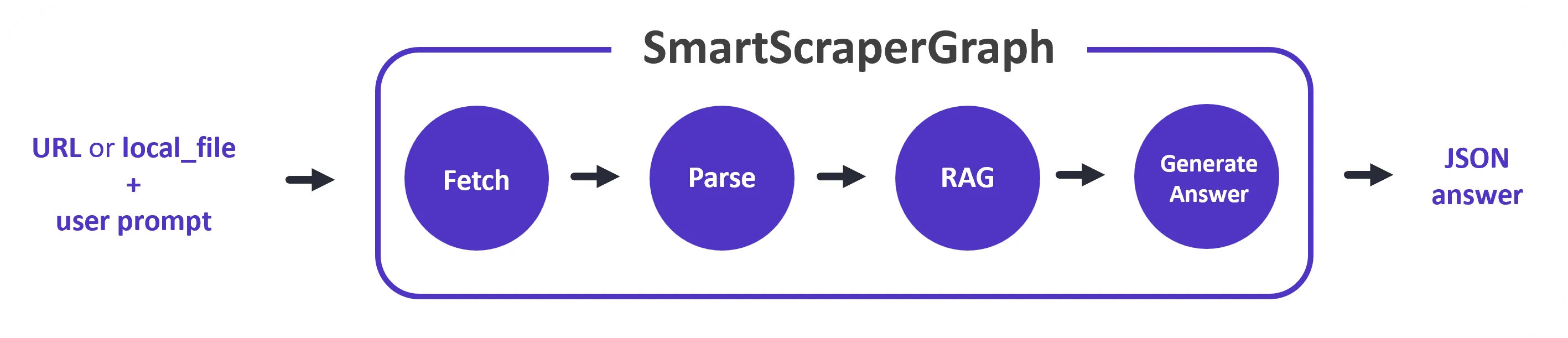

The "Skip the preamble" instruction ensures that Claude goes straight to the point and generates the right output.

Input → 🟢 → 🟡 → 🟣 → Output.

`XMLScraperGraph`, which we used in the example above, or the `SmartScraperGraph` (pictured below), and the possibility to create your own custom graphs.
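
The `Input → 🟢 → 🟡 → 🟣 → Output` flow can be read as a chain of nodes, each taking the current state and handing an updated state to the next. The toy sketch below illustrates that idea in plain Python. The three node functions, the state keys and `run_graph` are hypothetical stand-ins rather than ScrapeGraphAI's actual classes, and the LLM step is faked with a keyword match so the example runs offline.

```python
import re
from typing import Callable, Dict, List

State = Dict[str, object]
Node = Callable[[State], State]


def words(text: str) -> set:
    """Lower-cased set of words, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def fetch(state: State) -> State:
    # Hypothetical fetch node: a real graph would download a URL or read a file here.
    return {**state, "document": str(state["source"])}


def parse(state: State) -> State:
    # Hypothetical parse node: split the document into non-empty, stripped lines ("chunks").
    lines = str(state["document"]).splitlines()
    return {**state, "chunks": [line.strip() for line in lines if line.strip()]}


def generate_answer(state: State) -> State:
    # Offline stand-in for the LLM node: keep chunks sharing at least one word with the prompt.
    keywords = words(str(state["prompt"]))
    chunks: List[str] = state["chunks"]  # type: ignore[assignment]
    return {**state, "answer": [c for c in chunks if keywords & words(c)]}


def run_graph(nodes: List[Node], initial_state: State) -> State:
    """Run the nodes in order, passing each node's output state to the next one."""
    state = dict(initial_state)
    for node in nodes:
        state = node(state)
    return state


if __name__ == "__main__":
    result = run_graph(
        [fetch, parse, generate_answer],
        {"prompt": "What is the genre?", "source": "title: Schild's Ladder\ngenre: Science Fiction\n"},
    )
    print(result["answer"])  # ['genre: Science Fiction']
```

Because every node only reads and writes the shared state, nodes can be swapped or reordered independently. Replacing the fake `generate_answer` with a real model call, and `fetch` with a real HTTP request, is in spirit what the prebuilt graphs package up.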

`AWS_*`), but we can create a custom client and pass it along to the graph.

👨‍💻 All code and documentation is available on GitHub.
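
As a rough sketch of the custom-client route, assuming `boto3` is installed: `boto3.Session(...).client("bedrock-runtime")` is the standard way to get an Amazon Bedrock Runtime client with an explicit profile and region. The shape of the `llm` block (a `client` key, a `model` string with a `bedrock/` prefix, and `temperature`) is my assumption based on ScrapeGraphAI's Bedrock examples and should be checked against the library's documentation. The model ID is only an example.

```python
from typing import Any, Dict, Optional

# Example model ID; substitute any Bedrock model your account has access to.
DEFAULT_MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def make_bedrock_client(region: Optional[str] = None, profile: Optional[str] = None) -> Any:
    """Create a Bedrock Runtime client from an explicit profile/region instead of AWS_* variables."""
    import boto3  # imported lazily so build_graph_config can be used without boto3 installed

    session = boto3.Session(profile_name=profile, region_name=region)
    return session.client("bedrock-runtime")


def build_graph_config(client: Any, model_id: str = DEFAULT_MODEL_ID) -> Dict[str, Any]:
    """Assumed ScrapeGraphAI config layout: the client object travels inside the "llm" block."""
    return {
        "llm": {
            "client": client,                # assumption: key name from ScrapeGraphAI's Bedrock examples
            "model": f"bedrock/{model_id}",  # assumption: "bedrock/" provider prefix
            "temperature": 0.0,
        },
    }
```

The resulting dictionary would then be passed as the `config` of a graph, for example `SmartScraperGraph(prompt=..., source=..., config=build_graph_config(make_bedrock_client(region="us-east-1")))`. Keeping client creation separate from the config makes it easy to point the same graph at a different profile, region, or account.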

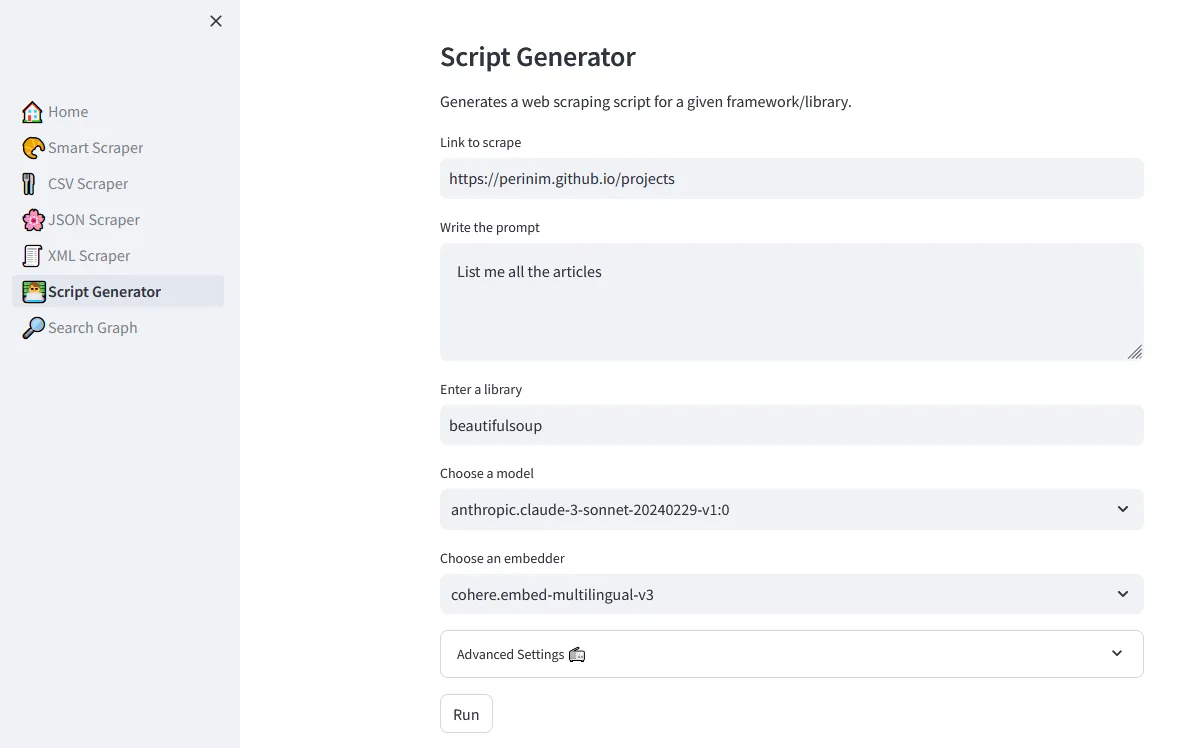

`ScriptCreatorGraph`, to generate an old-style scraping pipeline powered by BeautifulSoup or any other scraping library.
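
To give a feel for what "old-style" means here, below is the sort of script such a graph is meant to spare you from writing: a hand-written scraper that pulls the text of every element carrying a given CSS class. This is my own illustration, not actual `ScriptCreatorGraph` output. It swaps BeautifulSoup for Python's built-in `html.parser` module so it runs without third-party packages, and the HTML snippet and class names are hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple

# Hypothetical page snippet; a generated script would target the real page's markup.
SAMPLE_HTML = """
<ul>
  <li class="book"><span class="title">Schild's Ladder</span> by <span class="author">Greg Egan</span></li>
  <li class="book"><span class="title">The Dispossessed</span> by <span class="author">Ursula K. Le Guin</span></li>
</ul>
"""


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element whose class attribute contains target_class."""

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self.open_tag: Optional[str] = None  # tag name of the element being captured
        self.depth = 0                       # nesting count of open_tag inside the match
        self.buffer: List[str] = []
        self.results: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if self.open_tag is not None:
            if tag == self.open_tag:  # same tag nested inside the match
                self.depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self.open_tag, self.depth = tag, 1

    def handle_endtag(self, tag: str) -> None:
        if self.open_tag is not None and tag == self.open_tag:
            self.depth -= 1
            if self.depth == 0:
                # Collapse whitespace runs into single spaces.
                self.results.append(" ".join("".join(self.buffer).split()))
                self.open_tag, self.buffer = None, []

    def handle_data(self, data: str) -> None:
        if self.open_tag is not None:
            self.buffer.append(data)


def scrape_by_class(html: str, css_class: str) -> List[str]:
    parser = ClassTextExtractor(css_class)
    parser.feed(html)
    parser.close()
    return parser.results


if __name__ == "__main__":
    print(scrape_by_class(SAMPLE_HTML, "title"))
```

With BeautifulSoup installed, the heart of the same script shrinks to roughly `[el.get_text(strip=True) for el in BeautifulSoup(html, "html.parser").select(".title")]`, but you still have to inspect the page and pick the selectors yourself, which is the part a script-generating graph aims to automate.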

👷‍♂️ Want to contribute? Feel free to open an issue or a pull request!

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.