Can Claude Play Pokemon? Kind of

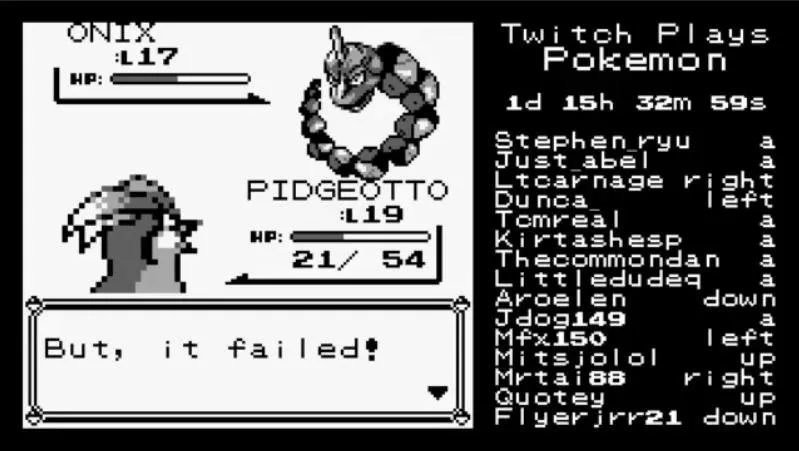

LLMs still struggle with spatial reasoning

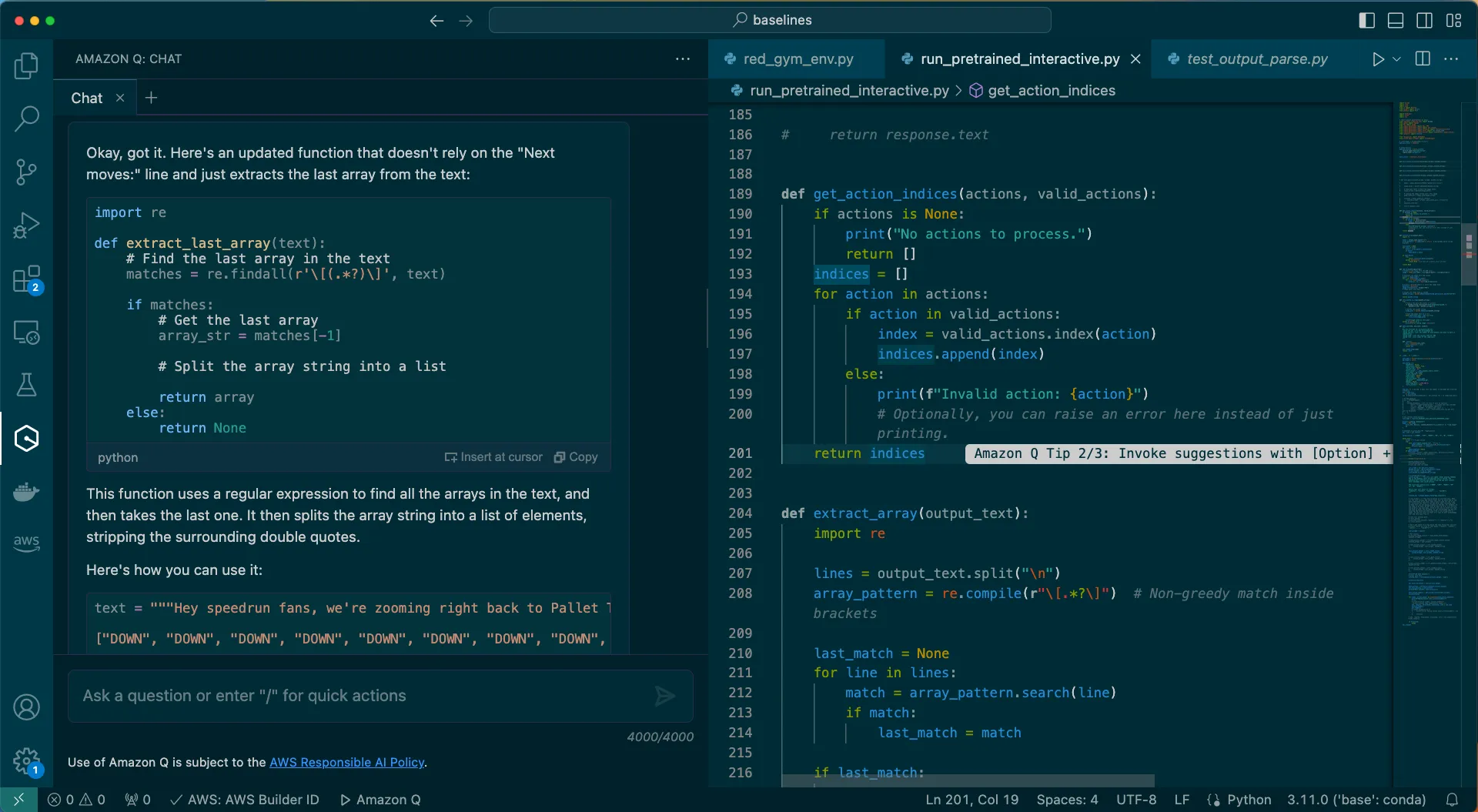

Can you write a function that, given two arrays, returns the indexes where they match?

e.g.:

```python
next_moves = ["DOWN", "DOWN", "DOWN"]
valid_actions = ["DOWN", "LEFT", "RIGHT", "UP", "A", "B", "START"]

values = get_action_indices(next_moves, valid_actions)
# values = [0, 0, 0]
```
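For reference, here is a minimal sketch of a function that satisfies this prompt. It is not the code the model produced. It assumes each entry in `next_moves` should map to its position in `valid_actions`, and it treats an unrecognized move as an error rather than silently skipping it.

```python
from typing import List


def get_action_indices(next_moves: List[str], valid_actions: List[str]) -> List[int]:
    """Return the position in valid_actions of each move in next_moves."""
    # Record the first index of each action so lookups are O(1) and match list.index().
    index_of = {}
    for i, action in enumerate(valid_actions):
        index_of.setdefault(action, i)

    indices = []
    for move in next_moves:
        # Assumption: an unrecognized move is an error rather than something to skip.
        if move not in index_of:
            raise ValueError(f"Unknown action: {move!r}")
        indices.append(index_of[move])
    return indices


next_moves = ["DOWN", "DOWN", "DOWN"]
valid_actions = ["DOWN", "LEFT", "RIGHT", "UP", "A", "B", "START"]
values = get_action_indices(next_moves, valid_actions)
print(values)  # [0, 0, 0]
```

Building the lookup dictionary once avoids calling `valid_actions.index()` for every move, which matters little for seven buttons but keeps the mapping explicit.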

- Spatial Reasoning in LLMs: One of the most significant takeaways from this experiment is the current limitation of LLMs in spatial reasoning. Navigating a game environment involves understanding and interpreting spatial relationships, something these models are not yet adept at.

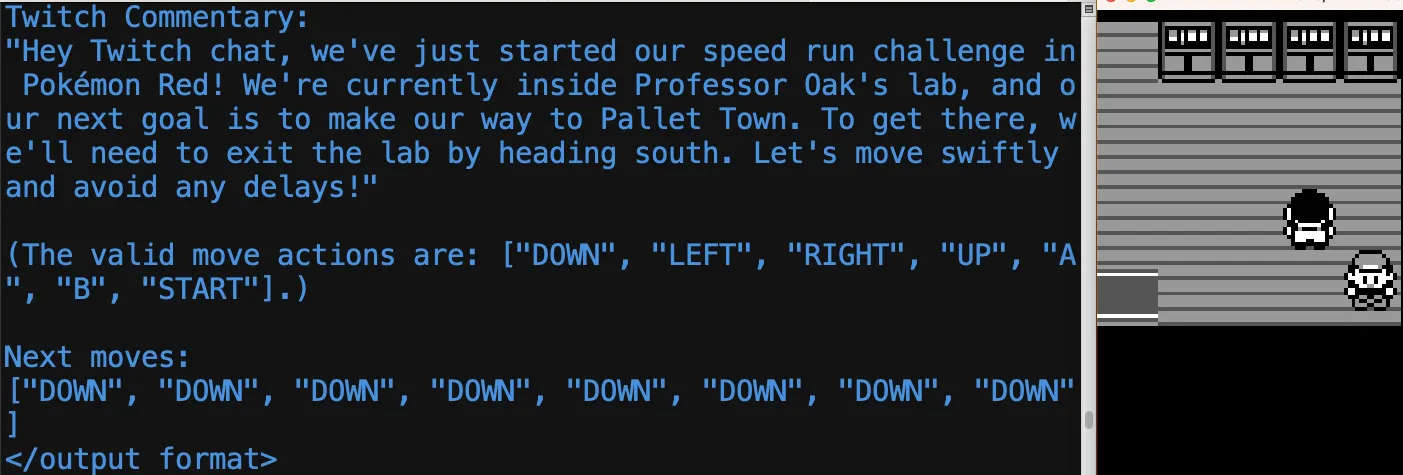

- Prompt Engineering: Changes to the prompt can lead to very different levels of success. For example, in my Pokemon Battle experiment, updates to the prompt raised the win rate from 5% to 50%. However, there is still a lot to learn about optimizing prompts for specific tasks, especially those involving visual inputs.

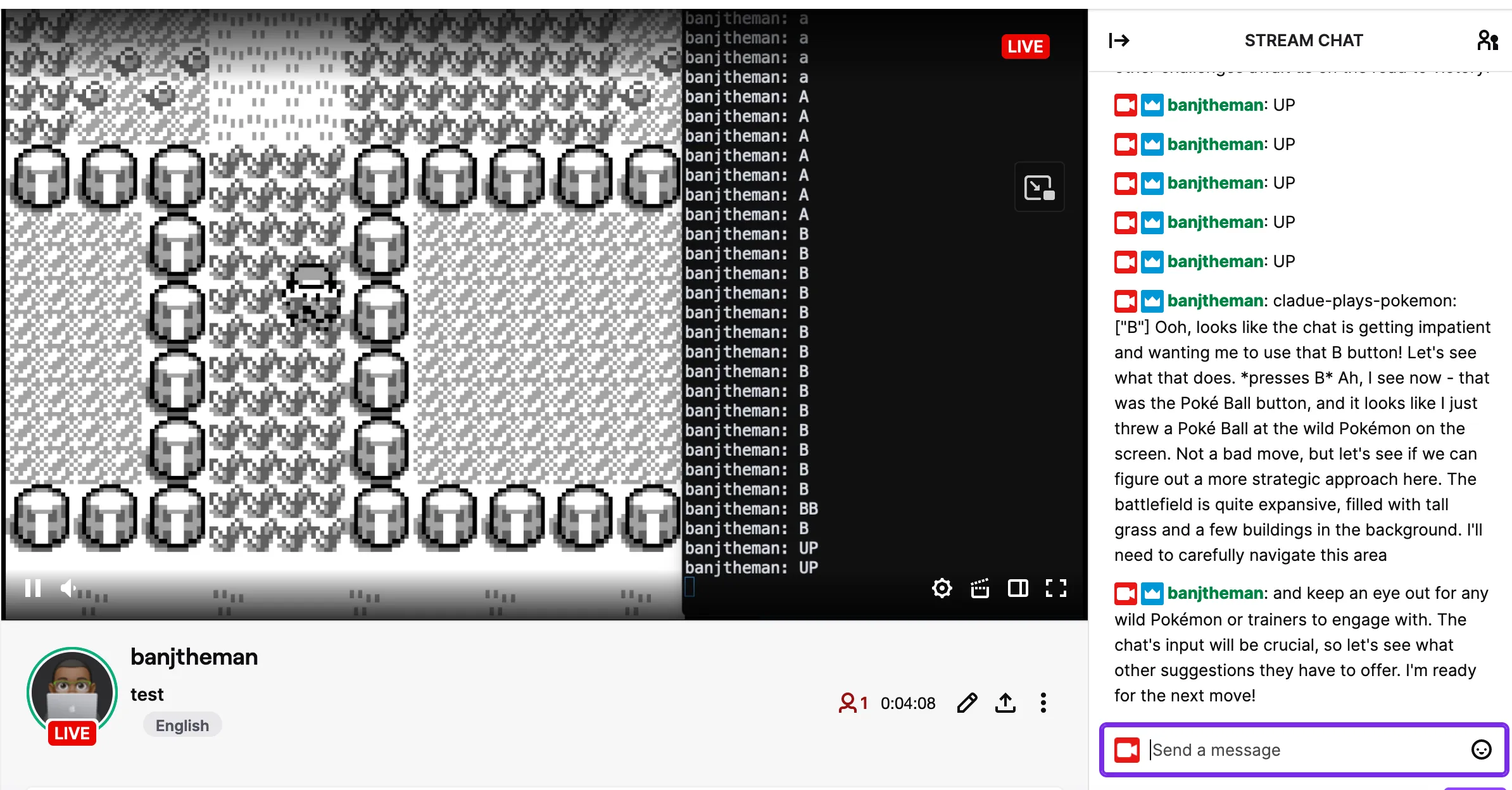

- Human-AI Collaboration: Combining human input with LLM processing can lead to better outcomes. The Twitch integration demonstrated that while the LLM might struggle with certain tasks on its own, it performs better when it processes and executes commands supplied by human viewers. Amazon Bedrock Agents recently added a "return of control" feature to support this kind of workflow.
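The post does not show how the Twitch integration passed chat input to the model, so the following is only a hypothetical sketch of one way to do it. The names `extract_commands`, `build_prompt`, and `VALID_ACTIONS` are illustrative assumptions, not the author's code. It keeps chat messages that exactly match a valid button, tallies them, and appends the tally to the LLM prompt so the model can weigh the human suggestions.

```python
from collections import Counter
from typing import List

# Hypothetical button set, mirroring the valid_actions list used earlier in the post.
VALID_ACTIONS = ["DOWN", "LEFT", "RIGHT", "UP", "A", "B", "START"]


def extract_commands(chat_messages: List[str], valid_actions: List[str]) -> Counter:
    # Keep only messages that are exactly a valid action (case-insensitive) and count them.
    valid = set(valid_actions)
    commands = (msg.strip().upper() for msg in chat_messages)
    return Counter(cmd for cmd in commands if cmd in valid)


def build_prompt(base_prompt: str, chat_messages: List[str], valid_actions: List[str]) -> str:
    # Append the human suggestions, most popular first, so the LLM can take them into account.
    counts = extract_commands(chat_messages, valid_actions)
    if not counts:
        return base_prompt
    suggestions = ", ".join(f"{cmd} x{n}" for cmd, n in counts.most_common())
    return f"{base_prompt}\nViewers suggest: {suggestions}"


chat = ["up", "UP", "a", "hello", " Up "]
print(build_prompt("Pick the next move.", chat, VALID_ACTIONS))
```

Passing the tally to the model, rather than executing the top vote directly, leaves the final decision with the LLM while still giving it the human signal.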

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.